
= Large block sizes =

Today the default is typically to use 4KiB block sizes for storage IO and filesystems. To leverage storage hardware more efficiently, though, it would be ideal to increase the block size for both storage IO and filesystems. This document tries to itemize the efforts required to address this properly in the Linux kernel. These goals are long term. Some tasks or problems may be rather easy to address, but addressing this completely is a large effort. It will often require coordination with different subsystems, in particular with the memory, IO, and filesystem folks.

<<TableOfContents(4)>>

== Filesystem support for 64KiB block sizes ==

Filesystems can support 64KiB block sizes by allowing the user to specify the block size when creating the filesystem. For instance, this can be accomplished as follows with XFS:

= Large block sizes (LBS) =

This page documents why block sizes grow over time across different storage technologies, to help with larger IO demands and cost. It also explains how the Linux kernel is adapting to support these changes.

<<TableOfContents(8)>>

== Increasing block sizes over time ==

Block sizes have increased before, and they are increasing again with more modern storage technologies. This section provides a brief overview of these advancements.

=== Increase in block sizes on older storage technologies ===

As storage capacity demands increase, it becomes more important to reduce the amount of space required to store data, which also helps reduce cost. Old storage devices used a physical block size of 512 bytes. To get past the theoretical limits on [[https://idema.org/?page_id=2369|areal density with 512 byte sectors]], storage devices advanced to "long data sectors". 1k became a reality and soon after that 4k physical drives followed.

=== Increase in block sizes on flash storage ===

With flash storage technologies, the increase in storage capacity is reflected in NAND through [[https://en.wikipedia.org/wiki/Multi-level_cell|multi-level cells]]. These reduce the number of MOSFETs required to store the same amount of data compared to single level cells, and so we have SLC, MLC, TLC, QLC and PLC. For example, [[https://www.flashmemorysummit.com/Proceedings2019/08-07-Wednesday/20190807_NVME-201-1_Wasserman.pdf|QLC provides 4 bits per cell, holding 33% more capacity than TLC cells]].

==== Indirection Unit size increases ====

[[https://www.usenix.org/legacy/event/hotstorage10/tech/full_papers/Arpaci-Dusseau.pdf|In modern SSDs, an indirection map in the Flash Translation Layer (FTL) enables the device to map writes in its virtual address space to any underlying physical location]].
Users use LBAs to interact with drives. The drive's FTL uses its indirection map for logical to physical (L2P) mapping, arranged in a specific configuration to map a given Logical Page Number (LPN) to a Physical Page Number (PPN). The granularity of this mapping is referred to as the '''Indirection Unit (IU)'''. The entire mapping table is kept in the storage controller's DRAM. The smaller the mapping table, the less DRAM is needed and therefore the lower the cost to end users. A '''4k IU''' has sufficed for many years, but [[https://www.colfax-intl.com/downloads/intel-achieving-optimal-perf-iu-ssds.pdf|as storage drive capacities increase it becomes increasingly necessary to embrace larger IUs to reduce SSD cost]]. Examples are the [[https://semiconductor.samsung.com/news-events/tech-blog/next-generation-qlc-v-nand-increases-data-center-profitability/|Samsung BM1743 61.45 TiB]] and the [[https://www.solidigm.com/products/data-center/d5/p5336.html|Solidigm D5-P5336]], which use a '''16k IU'''.

It is already well known that [[https://www.flashmemorysummit.com/Proceedings2019/08-07-Wednesday/20190807_NVME-201-1_Wasserman.pdf|using a 16KiB IU provides benefits for large sequential IOs]]. However, it is also important to review what a single 4k write implies on larger IUs. A 4k write on a 16k IU implies a 16k read followed by a 16k write. The Write Amplification Factor ('''WAF''') is defined as the amount of data actually written divided by the amount of data that needed to be written. In the worst case, a 4k IO workload on a 16k IU therefore has a WAF of 16k/4k = 4. The team at Micron presented research on this, delivered by Luca Bert at the 2023 Flash Memory Summit. It suggests that real workloads end up aligning most writes to the IU, and that in practice the WAF is much smaller.
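The worst case above and the DRAM savings reported by Micron can both be reproduced with simple arithmetic. The Python sketch below makes two assumptions. It models a flat L2P table with one 4-byte entry per IU (`L2P_ENTRY_BYTES` is an illustrative assumption, not a figure from any vendor cited here). It also models the worst case as rewriting, in full, every IU a write touches.

```python
KIB = 1024
TIB = 1024 ** 4

# Assumption for illustration only: one 4-byte L2P entry per IU.
L2P_ENTRY_BYTES = 4


def l2p_table_bytes(capacity_bytes: int, iu_bytes: int) -> int:
    """DRAM needed by a flat logical-to-physical map with one entry per IU."""
    return (capacity_bytes // iu_bytes) * L2P_ENTRY_BYTES


def worst_case_waf(offset: int, length: int, iu_bytes: int) -> float:
    """Worst-case WAF of one host write: every IU it touches is rewritten
    in full, so WAF = bytes written to media / bytes the host wrote."""
    first_iu = offset // iu_bytes
    last_iu = (offset + length - 1) // iu_bytes
    media_bytes = (last_iu - first_iu + 1) * iu_bytes
    return media_bytes / length


if __name__ == "__main__":
    capacity = 64 * TIB
    small = l2p_table_bytes(capacity, 4 * KIB)
    large = l2p_table_bytes(capacity, 16 * KIB)
    print(f"DRAM saved with a 16k IU: {1 - large / small:.0%}")
    print(worst_case_waf(0, 4 * KIB, 16 * KIB))
```

For a hypothetical 64 TiB drive, this gives a 64 GiB map with a 4k IU and a 16 GiB map with a 16k IU. That is a 75% reduction regardless of the assumed entry size. An IU-aligned 16k write has a WAF of 1, while the same write shifted by 4k touches two IUs and has a WAF of 2.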
Luca's team summarizes that:

 * Using a 16k IU provides 75% DRAM savings compared to a 4k IU
 * Using a 16k IU on a series of real workloads, the expected WAF was around 4, but the observed WAF was less than 2, and sometimes close to 1
 * The data shows a relationship between larger writes and lower WAF
 * Suppose you use a 16k IU, count the number of IOs for each workload at random points in time and compute the WAF. You would expect the worst WAF where most IOs are 4k. Empirical observations reveal, however, that the IO count is not what matters. What matters is the '''IO volume''' each IO contributes to a workload

==== IU size increase implications on Linux ====

More work should be done to independently verify Micron's findings. Even so, the adoption of larger IUs for QLC seems inevitable, given that products are already shipping with them. Storage vendors serious about large capacity QLC drives are either already embracing a 16k IU or are looking towards larger IUs. Part of the purpose of this page is to describe how Linux can adapt to support and take advantage of 16k and larger IUs.

==== Alignment to the IU ====

As documented in Intel's [[https://www.colfax-intl.com/downloads/intel-achieving-optimal-perf-iu-ssds.pdf|large Indirection Unit white paper]], one of the options to achieve optimal performance and endurance is to align writes to the IU. This can be done by:

 * Aligning partitions and their sizes to the IU
 * Using direct IO with buffers aligned to the IU through `posix_memalign()`

When this white paper was written, buffered IO aligned to IUs larger than 4k was not possible. Linux lacked support for pages larger than 4k in the page cache.
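Below is a minimal Python sketch of the alignment checks described above. The helper names are hypothetical. It assumes partition start and size are expressed in 512-byte sectors, which is how Linux reports them in sysfs. It does not cover buffer alignment in memory, which `posix_memalign()` handles for direct IO.

```python
SECTOR_BYTES = 512  # unit used for partition start/size in Linux sysfs


def partition_is_iu_aligned(start_sectors: int, size_sectors: int,
                            iu_bytes: int) -> bool:
    """True if both the partition start and its size are multiples of the IU."""
    start = start_sectors * SECTOR_BYTES
    size = size_sectors * SECTOR_BYTES
    return start % iu_bytes == 0 and size % iu_bytes == 0


def classify_write(offset: int, length: int, iu_bytes: int) -> str:
    """Classify one write, given as byte offset and length, against the IU."""
    start_ok = offset % iu_bytes == 0
    size_ok = length % iu_bytes == 0
    if start_ok and size_ok:
        return "aligned"            # only whole IUs are written
    if size_ok:
        return "misaligned start"   # IU-sized, but straddles IU boundaries
    return "partial IU"             # at least one IU is only partly written


if __name__ == "__main__":
    iu = 16 * 1024
    print(partition_is_iu_aligned(2048, 2048 * 1000, iu))  # 1 MiB start
    print(classify_write(4096, iu, iu))
```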

==== IO introspection verification ====

===== OCP NVMe Cloud specification SMART-21 log page =====

The [[https://www.opencompute.org/documents/nvme-cloud-ssd-specification-v1-0-3-pdf|OCP 1.0 NVMe Cloud specification]] refers to the '''SMART Cloud Health Log''', log page ''0xC0'', which is a vendor unique log page. Section 4.8.3 covers the log page guarantees. This log page is 512 bytes. Section 4.8.4 defines the attributes found on that log page. The '''SMART-21''' entry, at bytes 143:136 (offset 0x88), is an 8 byte field counting the writes which are unaligned to the IU:

{{{
This is a count of the number of write IOs performed by the device that are
not aligned to the indirection unit size (IU) of the device. Alignment
indicates only the start of each IO. The length does not affect this count.
This counter shall reset on power cycle. This counter shall not wrap. This
shall be set to zero on factory exit.
}}}

Reading this value should not affect performance, so it can be read before and after a workload to get the number of IOs which are unaligned to the IU. Example usage with nvme-cli:

{{{
nvme get-log /dev/nvme1n1 -i 0xc0 -L 0x88 -l 8
Device:nvme1n1 log-id:192 namespace-id:0xffffffff
       0  1  2  3  4  5  6  7  8  9  a  b  c  d  e  f
0000: 3c 21 00 00 00 00 00 00 "<!......"

# Write 8k in total as two sequential 4k direct IOs
fio --name direct-io-8k --direct=1 --rw=write --bs=4096 --numjobs=1 --iodepth=1 --size=$((4096*2)) --ioengine=io_uring --filename /dev/nvme1n1

# Get count after
nvme get-log /dev/nvme1n1 -i 0xc0 -L 0x88 -l 8
Device:nvme1n1 log-id:192 namespace-id:0xffffffff
       0  1  2  3  4  5  6  7  8  9  a  b  c  d  e  f
0000: 3d 21 00 00 00 00 00 00 "=!......"

# Do the simple math for one count
calc
; 0x213d - 0x213c
        1
}}}

===== eBPF blkalgn =====

Some devices do not support the OCP 0xC0 log page. For these there are more flexible alternatives, although they rely on eBPF and so can have an impact on performance.
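Both approaches measure the same property. SMART-21 counts writes whose start offset is not a multiple of the IU, and the same count can be computed from a per-I/O trace such as the `blkalgn --trace` output shown further down. The Python sketch below decodes the little-endian counter from an `nvme get-log` hex dump line and counts unaligned starts from a list of byte offsets.

Two assumptions apply. First, the device in the example above is assumed to use a 16k IU, which the example does not state. Second, `io_alignment()` is only our reading of the `ALGN` column, not code taken from the blkalgn source. It matches the trace below when LBAs are 4096-byte units.

```python
def smart21_from_hexdump(line: str) -> int:
    """Decode an 8-byte little-endian counter from an nvme-cli dump line,
    e.g. '0000: 3c 21 00 00 00 00 00 00 "<!......"'."""
    hex_part = line.split(":", 1)[1].split('"', 1)[0]
    raw = bytes(int(b, 16) for b in hex_part.split()[:8])
    return int.from_bytes(raw, "little")


def count_unaligned(offsets, iu_bytes: int) -> int:
    """Count writes whose start is not IU-aligned (what SMART-21 tracks)."""
    return sum(1 for off in offsets if off % iu_bytes)


def io_alignment(offset: int, length: int) -> int:
    """Largest power of two <= length (length > 0) dividing the byte offset."""
    align = 1 << (length.bit_length() - 1)
    while offset % align:
        align >>= 1
    return align


if __name__ == "__main__":
    before = smart21_from_hexdump('0000: 3c 21 00 00 00 00 00 00 "<!......"')
    after = smart21_from_hexdump('0000: 3d 21 00 00 00 00 00 00 "=!......"')
    print(after - before)
    # The fio job above wrote two 4k IOs at byte offsets 0 and 4096.
    print(count_unaligned([0, 4096], 16 * 1024))
```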
The `blkalgn` tool is being developed using eBPF to help monitor the size and alignment of the blocks sent to a device. The tool is still under development and is expected to be merged into the IOVisor/bcc <<FootNote(https://github.com/iovisor/bcc)>> project. It monitors the LBA (Logical Block Address) and size of all I/Os sent from the host to the drive.

The graph below represents the memory (in gray) in host base page size chunks, and shows how the I/Os (in yellow) may or may not be aligned to the IU boundaries. The first graph represents the case where the I/O size and alignment exactly match the IU boundaries. In the second graph, the size of the I/O matches the IU size but the I/O is not aligned to the IU boundaries. The last graph represents the case where the I/O matches neither the IU size nor its alignment.

{{attachment:iu-graph.png|width=100}}

Below is a quick demonstration of the tool using an `fio` demo workload:

- The following `fio` commands demonstrate various block sizes and their respective workloads:
{{{
fio -bs=4k -iodepth=1 -rw=write -ioengine=sync -size=4k \
    -name=sync -direct=1 -filename=/dev/nvme0n2 -loop 40
fio -bs=8k -iodepth=1 -rw=write -ioengine=sync -size=8k \
    -name=sync -direct=1 -filename=/dev/nvme0n2 -loop 60
fio -bs=16k -iodepth=1 -rw=write -ioengine=sync -size=16k \
    -name=sync -direct=1 -filename=/dev/nvme0n2 -loop 80
fio -bs=32k -iodepth=1 -rw=write -ioengine=sync -size=32k \
    -name=sync -direct=1 -filename=/dev/nvme0n2 -loop 100
fio -bs=64k -iodepth=1 -rw=write -ioengine=sync -size=64k \
    -name=sync -direct=1 -filename=/dev/nvme0n2 -loop 100
fio -bs=128k -iodepth=1 -rw=write -ioengine=sync -size=128k \
    -name=sync -direct=1 -filename=/dev/nvme0n2 -loop 80
fio -bs=256k -iodepth=1 -rw=write -ioengine=sync -size=256k \
    -name=sync -direct=1 -filename=/dev/nvme0n2 -loop 60
fio -bs=512k -iodepth=1 -rw=write -ioengine=sync -size=512k \
    -name=sync -direct=1 -filename=/dev/nvme0n2 -loop 40
}}}
- Running the `blkalgn` tool will output
the block size and alignment distribution for the captured write operations:
{{{
tools/blkalgn.py --disk nvme0n2 --ops write
^C
     Block size          : count     distribution
         0 -> 1          : 0        |                                        |
         2 -> 3          : 0        |                                        |
         4 -> 7          : 0        |                                        |
         8 -> 15         : 0        |                                        |
        16 -> 31         : 0        |                                        |
        32 -> 63         : 0        |                                        |
        64 -> 127        : 0        |                                        |
       128 -> 255        : 0        |                                        |
       256 -> 511        : 0        |                                        |
       512 -> 1023       : 0        |                                        |
      1024 -> 2047       : 0        |                                        |
      2048 -> 4095       : 0        |                                        |
      4096 -> 8191       : 40       |****************                        |
      8192 -> 16383      : 60       |************************                |
     16384 -> 32767      : 80       |********************************        |
     32768 -> 65535      : 100      |****************************************|
     65536 -> 131071     : 100      |****************************************|
    131072 -> 262143     : 80       |********************************        |
    262144 -> 524287     : 60       |************************                |
    524288 -> 1048575    : 40       |****************                        |

     Algn size           : count     distribution
         0 -> 1          : 0        |                                        |
         2 -> 3          : 0        |                                        |
         4 -> 7          : 0        |                                        |
         8 -> 15         : 0        |                                        |
        16 -> 31         : 0        |                                        |
        32 -> 63         : 0        |                                        |
        64 -> 127        : 0        |                                        |
       128 -> 255        : 0        |                                        |
       256 -> 511        : 0        |                                        |
       512 -> 1023       : 0        |                                        |
      1024 -> 2047       : 0        |                                        |
      2048 -> 4095       : 0        |                                        |
      4096 -> 8191       : 40       |****************                        |
      8192 -> 16383      : 60       |************************                |
     16384 -> 32767      : 80       |********************************        |
     32768 -> 65535      : 100      |****************************************|
     65536 -> 131071     : 100      |****************************************|
    131072 -> 262143     : 80       |********************************        |
    262144 -> 524287     : 60       |************************                |
    524288 -> 1048575    : 40       |****************                        |
}}}
- The tool can also trace all block commands issued to the block device using the `--trace` argument:
{{{
tools/blkalgn.py --disk nvme0n2 --ops write --trace
Tracing NVMe commands... Hit Ctrl-C to end.
DISK     OPS    LEN    LBA       PID   COMM      ALGN
...
nvme0n1  write  16384  5592372   1402  postgres  16384
nvme0n1  write  4096   8131612   1406  postgres  4096
nvme0n1  write  4096   8476298   1407  postgres  4096
nvme0n1  write  4096   5562083   1404  postgres  4096
nvme0n1  write  4096   5562081   1404  postgres  4096
nvme0n1  write  8192   5740024   977   postgres  8192
nvme0n1  write  8192   8476258   1407  postgres  8192
nvme0n1  write  16384  2809028   1400  postgres  16384
nvme0n1  write  8192   9393492   1402  postgres  8192
nvme0n1  write  16384  1777248   1401  postgres  16384
nvme0n1  write  8192   11376510  1404  postgres  8192
nvme0n1  write  4096   11376508  1404  postgres  4096
nvme0n1  write  8192   1022036   1405  postgres  8192
nvme0n1  write  4096   5755970   977   postgres  4096
nvme0n1  write  16384  1777252   1401  postgres  16384
nvme0n1  write  4096   9393589   1402  postgres  4096
nvme0n1  write  4096   2809039   1400  postgres  4096
nvme0n2  write  8192   9637351   1403  postgres  4096
nvme0n1  write  4096   5822235   1406  postgres  4096
}}}

More details can be found in the development repository <<FootNote(https://github.com/dkruces/bcc; branch: blkalgn)>> and in the pull request <<FootNote(https://github.com/iovisor/bcc/pull/4813)>> submitting the tool to the IOVisor/bcc project. A version of the tool with capture capabilities is also being explored. It is currently hosted in the same development tree, in the blkalgn-dump branch.

==== Supporting buffered IO for larger IUs ====

With direct IO writes, your software is in charge of proper alignment. This can instead be done transparently for you by supporting larger block sizes, aligned to the IU, in the page cache. Linux used to lack support for page cache entries larger than 4k on x86_64. The conversion of the page cache from a custom radix tree to the xarray now allows the page cache to use entries larger than 4k through folios. This also paves the way to support block sizes greater than the page size (`bs > ps`), and so block sizes greater than 4k.
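The read-modify-write that the page cache performs for writes smaller than a block can be sketched with a toy cache in Python. This is an illustration under stated assumptions, not how the kernel implements it. It assumes the filesystem block size equals the IU. A `bytearray` stands in for the drive, a dict of block-sized buffers stands in for the page cache, and all function names are hypothetical.

```python
def rmw_range(offset: int, length: int, block_size: int):
    """Block-aligned (start, end) byte range covering a write."""
    start = offset - offset % block_size
    end = -(-(offset + length) // block_size) * block_size  # round up
    return start, end


def buffered_write(cache: dict, device: bytearray, offset: int,
                   data: bytes, block_size: int) -> list:
    """Apply a write to the toy page cache; return the dirtied block indices."""
    dirty = []
    start, end = rmw_range(offset, len(data), block_size)
    for blk_start in range(start, end, block_size):
        idx = blk_start // block_size
        if idx not in cache:
            # Read: bring the whole block into memory before a partial update.
            cache[idx] = bytearray(device[blk_start:blk_start + block_size])
        # Modify: copy only the part of the write that overlaps this block.
        lo = max(offset, blk_start)
        hi = min(offset + len(data), blk_start + block_size)
        cache[idx][lo - blk_start:hi - blk_start] = data[lo - offset:hi - offset]
        dirty.append(idx)
    return dirty


def writeback(cache: dict, device: bytearray, dirty: list,
              block_size: int) -> None:
    """Write: each dirty block reaches the device as one full, aligned IO."""
    for idx in dirty:
        device[idx * block_size:(idx + 1) * block_size] = cache[idx]
```

Take a 16-byte block standing in for a 16k IU. A 4-byte write at offset 20 dirties only block 1, and writeback then issues a single aligned 16-byte write covering bytes 16 to 31.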
By using the xarray to support `bs > ps`, we can support block sizes aligned to larger IUs. This lets the page cache handle, in memory, the read-modify-writes for IO smaller than the IU, so all IO writes to the device can be aligned to the IU.

== Linux support for larger block sizes ==

Linux has supported larger block sizes on both filesystems and block devices for years when the `PAGE_SIZE` is also large. Supporting a block size > `PAGE_SIZE`, or `bs > ps` for short, has required much more work and is the main focus of this page. This page tries to itemize and keep track of progress, outstanding issues, and the ongoing efforts to support `bs > ps` (`LBS`) for filesystems and storage devices on Linux. Addressing this is a large endeavor requiring coordination with different subsystems: filesystems, memory and IO.

== Filesystem and storage LBS support ==

Linux filesystems have historically limited their maximum supported data block size to the CPU and operating system `PAGE_SIZE`. The page size for x86 has been 4k since the i386 days. But Linux has also supported architectures with page sizes greater than 4k for years, for example PowerPC with `CONFIG_PPC_64K_PAGES` and ARM64 with `CONFIG_ARM64_64K_PAGES`. In fact, some architectures such as ARM64 can support different page sizes. The XFS filesystem, for example, has supported a data block size of up to 64k for years on ppc64 systems with `CONFIG_PPC_64K_PAGES`. Some RAID systems with this setup are known to have existed and been sold. To create a 64k blocksize filesystem you can use:


In this example a 64 KiB block size is used. This corresponds to the respective kdevops XFS section to test xfs_bigblock. To be able to use and test this, though, the underlying architecture must also support 64 KiB page sizes. Examples are:

 * ARM64: through CONFIG_ARM64_64K_PAGES
 * PowerPC: through CONFIG_PPC_64K_PAGES

The x86_64 architecture still needs work to support this.

To support this, a filesystem needs to compute the correct offset in the file to the corresponding block on the disk. This is obscured by the get_block_t API. When CoW is enabled this gets more complicated due to memory pressure on write, as the kernel would need all the corresponding 64 KiB pages in memory. Low memory situations create problems.

== Storage IO supporting 64 KiB block sizes ==

A world where the 4 KiB PAGE_SIZE matches the storage block size is rather simple with respect to writeback under memory pressure. If your storage device has a larger block size than PAGE_SIZE, the kernel can only send a write once it has all the required data in memory. In this situation, reading data also means waiting for all the data to be read from the drive. You could use something like a bit bucket. However, the data would then somehow have to be invalidated should a write come in during, say, the second PAGE_SIZE read of a storage block twice the PAGE_SIZE.

=== Using folios on the page cache to help ===

Writeback gets more complex when the storage supports IO block sizes larger than PAGE_SIZE. To address this, folios should be used as a long term solution for the page cache, to opportunistically cache files in larger chunks. However, there are some problems that need to be considered and measured to prove or disprove their value for storage. Folios can be used to address the writeback problem by ensuring that the storage block size is treated as a single unit in the page cache.

A problem with this is the assumption that the kernel can keep providing allocations of the target block IO size over time, despite possible memory fragmentation. The assumption here is that if a filesystem regularly creates allocations in the block size required by the storage device, the kernel will also be able to reclaim memory in these block sizes. A consideration here is that some workloads may end up spiraling down with no allocations available for the target IO block size. Testing this hypothesis is something which all of us in the community could work on. The more memory gets cached using folios, the easier it becomes to address problems and memory contention when using a block device with a larger IO block size than PAGE_SIZE.

The above will work today even on x86 with a 4k `PAGE_SIZE`. However, mounting the filesystem will only work on a system with at least a 64k `PAGE_SIZE`. Linux XFS was ported from IRIX's version of XFS, and IRIX supported block sizes larger than the CPU's `PAGE_SIZE`. We refer to this for short as `bs > ps`. Linux has so far lacked `bs > ps` support, and adding it should bring XFS up to parity with the features that IRIX XFS used to support. Supporting `bs > ps` requires proper operating system support. Today we strive to make this easier on Linux through the adoption of folios and `iomap`. The rest of this document focuses on the `bs > ps` world when considering LBS for filesystems.

Using a large block size on a filesystem does not necessarily mean you need a storage device with a large or matching physical block size. The storage device's actual unit of data placement is referred to as the sector size. XFS supports 64k block sizes in the filesystem, but the XFS sector size today is limited to 32k. Most storage devices today work with a maximum sector size of 4k. Work to support storage devices with larger sector sizes is also part of this endeavor.

=== Linux support for LBS ===

Support for LBS on filesystems and on block devices requires different types of effort. Most of the work required for filesystem support with `bs > ps` lies in the `page cache`, to support buffered IO. The page cache helps filesystems support buffered IO with LBS by accepting writes at a smaller granularity than the block device sector size requires and dealing with the changes in memory. When bypassing the page cache with direct IO, userspace must take care of proper alignment itself. LBS devices may require alignment larger than what most applications are used to today, that is, larger than 4k, which can be handled with things like `posix_memalign()`. Partitions should be aligned to the LBS size used.
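Userspace can discover the sizes to align to from the block queue limits Linux exports under `/sys/block/<disk>/queue/`. The `logical_block_size`, `physical_block_size`, `minimum_io_size` and `optimal_io_size` files there hold values in bytes. The Python sketch below reads them. The helper names are our own, and so is the policy in `direct_io_alignment()`, which aligns to the largest of the logical block size, physical block size and minimum IO size. That policy is an assumption, not an established rule.

```python
from pathlib import Path

QUEUE_LIMITS = ("logical_block_size", "physical_block_size",
                "minimum_io_size", "optimal_io_size")


def parse_queue_limits(raw: dict) -> dict:
    """Convert the raw sysfs strings (values in bytes) to integers."""
    return {name: int(raw[name].strip()) for name in QUEUE_LIMITS}


def read_queue_limits(disk: str, sysfs_root: str = "/sys/block") -> dict:
    """Read the queue limits of a disk, e.g. read_queue_limits("nvme0n1")."""
    qdir = Path(sysfs_root) / disk / "queue"
    return parse_queue_limits({n: (qdir / n).read_text() for n in QUEUE_LIMITS})


def direct_io_alignment(limits: dict) -> int:
    """Alignment for direct IO buffers, offsets and sizes.

    The logical block size is the hard minimum; also aligning to the
    physical block size and minimum IO size avoids device-side
    read-modify-write. optimal_io_size is often 0 (unreported), so it
    is not used here.
    """
    return max(limits["logical_block_size"],
               limits["physical_block_size"],
               limits["minimum_io_size"])
```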
Changes may be required to userspace tools so that they always query the minimum IO and optimal IO sizes, if they do not do so already. Most applications should already be doing this because of the old 512 byte to 4k transition. However, applications which rely on static 4k assumptions need to be fixed if they use direct IO. Testing to ensure all this works properly is ongoing.

Supporting LBS for filesystems should be done first. This means supporting block sizes greater than 4k while sticking to a 4k sector size, so that no new IO device is required. A second step is to support devices with larger sector sizes which do not require a larger LBA format. This is possible, for example, when the `physical_block_size` is larger than 4k but the LBA format is still 4k. The last step is to support devices which require an LBA format larger than 4k. Filesystems which already support folios and use iomap will require less work, so the main focus of this endeavor is to work on XFS first.

=== How xarray enables larger folios and bs > ps ===

The Linux kernel v4.20 release was the first to include the xarray, a new data structure used by the page cache which is key to enabling `bs > ps`. The [[https://static.lwn.net/kerneldoc/core-api/xarray.html|Linux kernel Xarray docs]] suffice to get a general understanding of the goals of the xarray. However, they do not detail much of the advanced API, do not explain why it enables `bs > ps`, and do not help us understand the requirements for `bs > ps`. xarray resources:

 * [[https://www.youtube.com/watch?v=v0C9_Fp-co4|2018 Linux Conf AU xarray talk on YouTube]] - [[https://lwn.net/Articles/745073/|great LWN follow up coverage]]
 * [[https://www.youtube.com/watch?v=-Bw-HWcrnss|2019 Linux Conf AU xarray talk on Youtube]]

The xarray has two APIs available to users, the simple API and the advanced API. The page cache uses the advanced API.
Both support an optional xarray feature called multi-index support: `CONFIG_XARRAY_MULTI`. The Kconfig help text describes it well: ''Support for entries which occupy the multiple consecutive indices in the Xarray.'' This is the key part which enables `bs > ps` on Linux today.

==== Page cache considerations ====

Not everything is obvious when working with the Linux kernel page cache. The items below highlight some of the less obvious requirements when working with multi-index xarray support for the page cache:

 * Allocating and inserting into the page cache is not the only way in which folios become useful for IO
 * Truncation always tries to reclaim any unused pages in high order folios
 * Readahead sizes may vary, and readahead sizes are used to advance mapped userspace pages; these have no notion of minimum order requirements today
 * swap does not support high order folios today
 * compaction does not support high order folios today
 * index alignment to page order (covered below)

==== Page cache alignment to min order ====

When adding entries into the page cache, we work in multiples of the minimum number of pages supported, that is, the `min order`. The page cache does not do this for us, so we must ensure that all higher order folios added for `bs > ps` are aligned to the `min order`. For example, a min order of 2 means a 16 KiB block size on a 4k `PAGE_SIZE`, so we use indices 0, 4, 8 and so on. This is what allows us to mimic in software a `16k PAGE_SIZE` even though the real `PAGE_SIZE` is `4k`.

There are two current use cases which try to use large order folios in the page cache today, without considering `bs > ps`:

 * readahead
 * iomap buffered writes

Both of these add high order folios to the page cache. We expect shmem to soon follow the same approach in how it uses high order folios.
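To make the min order rule concrete, here is a toy Python model of a page cache backed by multi-index entries. A folio of order N occupies 2^N consecutive page indices and may only start at an index aligned to its own order. Because the folio order is never below the min order, this also keeps every folio aligned to the min order. This is a hypothetical illustration assuming a 4k `PAGE_SIZE`, and the class and method names are made up. It is not the xarray API.

```python
PAGE_SIZE = 4096


def folio_size(order: int, page_size: int = PAGE_SIZE) -> int:
    """An order-N folio spans 2**N pages."""
    return page_size << order


class ToyPageCache:
    """Maps page indices to folios; one folio covers 2**order indices,
    mimicking a multi-index entry (CONFIG_XARRAY_MULTI)."""

    def __init__(self, min_order: int):
        self.min_order = min_order
        self.slots = {}  # page index -> (first index, order)

    def can_add(self, index: int, order: int) -> bool:
        nr_pages = 1 << order
        if order < self.min_order or index % nr_pages:
            return False  # too small, or not naturally aligned
        return all(i not in self.slots for i in range(index, index + nr_pages))

    def add_folio(self, index: int, order: int) -> None:
        if not self.can_add(index, order):
            raise ValueError(f"cannot add order-{order} folio at index {index}")
        for i in range(index, index + (1 << order)):
            self.slots[i] = (index, order)

    def lookup(self, index: int):
        """Return (first index, order) of the folio covering index, or None."""
        return self.slots.get(index)
```

With a min order of 2, order-2 folios can be added at indices 0 and 4 and an order-3 folio at index 8. A lookup of index 5 then finds the folio starting at 4, while an order-2 folio at index 2 is rejected.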
High order folios are used regardless of whether or not we use `bs > ps`. Both readahead and iomap use a loop which tries the largest high order folio possible for IO, without considering `bs > ps`. An implicit rule in that loop today, though, is to use high order folios aligned to the index. The rationale for this is to avoid changes which are too aggressive, i.e., to not consume more memory than before. Aligning high order folios to the index is otherwise not a strict requirement, but it becomes one when supporting `bs > ps`, as it also ensures alignment to the minimum page order. A higher order folio is simply a lower order folio's size multiplied by 2 one or more times, so multiplying 16k by 2 repeatedly keeps it aligned to 16k. Alignment to a higher order folio therefore implies alignment to a lower order folio. However, the indices at which a new high order folio can start vary when working with a min order.

Whether or not we want to support unaligned orders at different mapped indices in our address space is debatable to a certain degree. With a min order of 0 we can obviously place folios freely across the address space. Even then, we have currently opted in to a few requirements worth clearly documenting:

 * a) [[https://lore.kernel.org/all/ZH0GvxAdw1RO2Shr@casper.infradead.org/|we skip order 1, since the deferred_list lives in the third page of a large folio, so every large order folio must have at least three pages]]. Future LBS patches will remove this restriction
 * b) we should not allocate high order folios if the min order is 0 but the index is not aligned to a higher order folio, [[https://lore.kernel.org/all/ZIeg4Uak9meY1tZ7@dread.disaster.area/|so to save space]] and reduce impact, i.e., make minimal changes

It would seem b) *may* change in the near future, to make the use of higher order folios more aggressive with min order 0.
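The interaction between index alignment, the min order and rule a) can be explored with a short Python sketch. It picks the largest order that is naturally aligned at a page index, never goes below the min order, and drops order 1 to order 0 when the min order is 0. This is a simplified model with a hypothetical function name. It is not the kernel's readahead or iomap loop.

```python
def max_order_at(index: int, min_order: int, max_order: int,
                 skip_order1: bool = True) -> int:
    """Largest folio order that may start at page index under the rules above."""
    if index % (1 << min_order):
        raise ValueError("index must be aligned to the min order")
    order = max_order
    # Only use an order whose folio is naturally aligned at this index
    # (this is what keeps every folio aligned to the min order as well).
    while order > min_order and index % (1 << order):
        order -= 1
    # Rule a): no order-1 folios; fall back to order 0 when min order is 0.
    if skip_order1 and order == 1 and min_order == 0:
        order = 0
    return order
```

For example, with a min order of 0 and a max order of 4, indices 0 to 7 yield orders 4, 0, 0, 0, 2, 0, 0, 0. With a min order of 2, indices 0, 4, 8, 12 and 16 yield orders 4, 2, 3, 2 and 4.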
A min order implies we count in units of min order pages. By definition, the index used for an allocation must therefore always be aligned to the min order, and we only allocate folios at indices aligned to the min order. A folio of higher order than the min order is by definition a multiple of the min order. So if an index is aligned to an order higher than the min order, it is also aligned to the min order. Note that, with these considerations, the max order which can be used varies from index to index in a way that is not obvious. For details, refer to [[https://github.com/linux-kdevops/lbs-tests/commit/1264f147e9da5af9fa7b6961dc72647fb81217d3|a userspace tool which visualizes this a bit better]].

Patches for a first iteration of all this page cache work are done and will be posted soon.

 * [[https://lore.kernel.org/all/20230915183848.1018717-1-kernel@pankajraghav.com/ | [RFC 00/23] Enable block size > page size in XFS]]

==== How folios help LBS ====

Writeback gets more complex when the storage supports `bs > PAGE_SIZE`. To address this, folios should be used as a long term solution for the page cache, to opportunistically cache files in larger chunks. However, there are some problems that need to be considered and measured to prove or disprove their value for storage. Folios can be used to address the writeback problem by ensuring that the storage block size is treated as a single unit in the page cache.

A problem with this is the assumption that the kernel can keep providing allocations of the target block IO size over time, despite possible memory fragmentation. A hypothesis to consider here is that if a filesystem regularly creates allocations in the block size required by the storage device, the kernel will also be able to reclaim memory in these block sizes. In the worst case, some workloads may end up spiraling down with no allocations available for the target IO block size.
Testing this hypothesis is something which all of us in the community could work on. The more memory gets cached using folios, the easier it becomes to address problems and memory contention with `bs > ps`.

==== Folio order count recap ====

Folio sizes are more easily tracked by the folio order, which is the power of 2 multiple of `PAGE_SIZE`. So order 0 is 2^0, a single `PAGE_SIZE` folio. Order 2 is 2^2, so `4 * PAGE_SIZE`, which is 16k with a 4k `PAGE_SIZE`.

=== Block device cache LBS support ===

Block based storage devices also have an implied block device page cache, the '''bdev cache'''. The Linux '''bdev cache''' is used to query the storage device's capacity and to give the block layer access to information about a drive's partitions. By default, a device with a capacity, or size, greater than 0 gets scanned for partitions on device initialization through `device_add_disk()`. The '''block device page cache''' is implemented as a simple filesystem where each block device gets its own `super_block` with just one inode representing the entire disk. For this reason, the '''bdev cache''' today uses the old buffer-heads by default to talk to the block device for block data.

 * [[https://lore.kernel.org/all/20230915213254.2724586-1-mcgrof@kernel.org/T/#u | [RFC v2 00/10] bdev: LBS devices support to coexist with buffer-heads]]

==== CONFIG_BUFFER_HEAD implications for block device cache ====

In Linux you can now avoid using buffer-heads on the '''block device cache''' if you only enable filesystems which use `iomap`. Today that effectively means enabling only xfs and btrfs and disabling most other filesystems, such as ext4, which lets you disable `CONFIG_BUFFER_HEAD`. Using `iomap` for the '''block device cache''', however, is only needed if your device requires a minimum sector size greater than 4k. If your drive supports 4k IOs, you can still benefit from LBS without disabling `CONFIG_BUFFER_HEAD`.
In reality, the requirement is that the storage drive supports IOs of the system `PAGE_SIZE`, that is, its minimum IO size is no larger than `PAGE_SIZE`.

==== block device cache buffer-head and iomap co-existence ====

Today the '''block device cache''' uses buffer-heads if `CONFIG_BUFFER_HEAD=y`. Only if `CONFIG_BUFFER_HEAD` is disabled will the '''block device cache''' use iomap. There is no alternative. Work is underway to support co-existence. The goal is to let the block device cache support LBS devices which only support IOs larger than your system `PAGE_SIZE`, while optionally also supporting block devices which may want to use filesystems that require buffer-heads. Patches for co-existence of iomap and buffer-heads in the block device cache exist and will be posted soon.

=== Block driver support ===

Not all block drivers are yet equipped to support LBS drives. Each one would need to be modified to ensure it works with LBS.

=== Filesystem LBS support ===

To support a larger block size, a filesystem needs to provide the correct offset-in-file to block-on-disk mapping. Supporting LBS with reflinks / CoW can be more complicated. For example, under memory pressure on write, the kernel would need all the corresponding 64 KiB pages in memory, and low memory situations could easily create synchronization problems. Filesystems provide the offset-in-file to block-on-disk mapping through the old buffer-heads or the newer `iomap`. Synchronization and dealing with LBS atomically are enabled by using folios.

==== LBS with buffer-heads ====

Linux buffer-heads, implemented in fs/buffer.c, provide filesystems with a 512 byte buffer array based block mapping abstraction for dealing with block devices and the page cache. As decided at LSFMM 2023 in Vancouver, Canada, filesystems which use buffer-heads will not be able to leverage LBS support. Today most filesystems except xfs rely on buffer-heads. Filesystems which want to benefit from LBS need to be converted over to iomap.
btrfs is an example of a filesystem which is working on converting over to use iomap completely.

==== LBS with iomap ====
The newer '''iomap''' allows filesystems to provide callbacks in a similar way, but it was instead designed to let filesystems simplify their block-mapping callbacks, with a dedicated callback for each type of need within the filesystem. This makes the filesystem callbacks easier to read. Filesystems which rely on '''iomap''' can take advantage of LBS support.

=== LBS with storage devices ===
Storage drivers announce a device's physical block size to Linux by calling `blk_queue_physical_block_size()`. To help with stacking IO devices and to ensure backward compatibility with older userspace, a driver can announce a smaller logical block size with `blk_queue_logical_block_size()`.

==== LBS with NVMe ====
Large block size support for NVMe can come through different means.

===== LBS NVMe support =====
There are different ways in which NVMe drives can support LBS:
 * Using optimal performance and atomics:
  * `npwg` >= 16k
  * `awupf` or `nawupf` >= 16k
 * Larger than 4k LBA format

The `npwg` is the Namespace Preferred Write Granularity. If a namespace defines it, it indicates the smallest recommended write granularity, in logical blocks, for this namespace. It must be <= the Maximum Data Transfer Size (MDTS).

The `awupf` is the Atomic Write Unit Power Fail, which indicates the size of the write operation guaranteed to be written atomically to the NVM across all namespaces with any supported namespace format during a power fail or error condition. If a specific namespace guarantees a larger size than is reported in this field, then this namespace specific size is reported in the `nawupf` field in the Identify Namespace data structure.
The `nawupf` is the Namespace Atomic Write Unit Power Fail, which indicates the namespace specific size of the write operation guaranteed to be written atomically to the NVM during a power fail or error condition.

===== LBS with NVMe npwg and awupf =====
OCP 2.0 requires the NVMe drive Indirection Unit ('''IU''') to be exposed through `npwg`. NVMe drives which support '''both''' an `npwg` of 16k and an `awupf` of 16k will be able to leverage support for a 16k physical block size while still supporting a 4k LBA format. A 4k LBA format means your drive remains backward compatible with 4k IOs.

NVMe sets the logical and physical block sizes in `nvme_update_disk_info()`. The logical block size is set to the NVMe block size. The physical block size is set to the minimum of the preferred write granularity (derived from `npwg` when the namespace reports it, otherwise the NVMe block size) and the NVMe's announced atomic write size (`nawupf` or `awupf`).

The `mdts` is the Maximum Data Transfer Size, which indicates the maximum data transfer size for a command that transfers data between memory accessible by the host and the controller. If a command is submitted that exceeds this transfer size, then the command is aborted.

===== The inevitability of large NVMe npwg =====
QLC drives with OCP 2.0 support using large '''IUs''' will naturally already expose a matching `npwg`.

===== The inevitability of large NVMe awupf =====
If QLC drives with a larger than 4k '''IU''' expose a larger than 4k `npwg`, the only thing left for these drives to do is to support a matching `awupf` or `nawupf` to support larger atomics. From discussions at the [[https://lwn.net/Articles/932900/|LSFMM in 2023 cloud optimizations session]] it was clear that cloud vendors are already starting to provide support for these large atomic optimizations using custom storage solutions.
The main rationale for these is to help databases which would rather write their larger pages atomically than do double writing, one write to the journal and one to the actual tables, which they otherwise do to protect against '''torn writes'''. Major cloud vendors already support large atomics to enable databases to do a full 16k write and avoid the [[https://dev.mysql.com/doc/refman/8.0/en/innodb-doublewrite-buffer.html|MySQL double write buffer]]. MySQL can take advantage of large atomics today without any change in the kernel, because a bio in the Linux kernel for direct IO will not tear an aligned 16k atomic write. Cloud vendors such as AWS, GCE and Alibaba acknowledge supporting custom large atomics for this purpose. How large a large atomic should be can vary: some storage vendors already support hundreds of megabytes, and so it is natural to expect more storage vendors to adapt as well. It was clear from the LSFMM session that more than 64k was desirable.

===== NVMe LBA format =====
NVMe drives support different data block sizes through the different LBA formats they support. Today NVMe drives using an LBA format with a block size greater than the `PAGE_SIZE` are effectively disabled by assigning a disk capacity of 0 using `set_capacity_and_notify(disk, 0)`. Patches for this are complete and will be posted soon.

To query which LBA formats your drive supports you can use something like the following with `nvme-cli`:
{{{
nvme id-ns -H /dev/nvme4n1 | grep ^LBA
LBA Format 0 : Metadata Size: 0 bytes - Data Size: 512 bytes - Relative Performance: 0 Best
LBA Format 1 : Metadata Size: 0 bytes - Data Size: 4096 bytes - Relative Performance: 0 Best (in use)
}}}
This is an example NVMe drive which supports two different LBA formats, one with a 512 byte data block size and another with a 4096 byte data block size. To switch a drive to a different LBA format you can use `nvme format` from `nvme-cli`.
For example, to use the 4k LBA format above we'd use:
{{{
nvme format --lbaf=1 --force /dev/nvme4n1
}}}

==== LBS Storage alignment considerations ====
Reads and writes to storage drives need to be aligned to the storage medium's physical block size for both performance and correctness. To help with this, Linux started exporting block device and partition IO topologies to userspace with commit [[https://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git/commit/?id=c72758f33784e5e2a1a4bb9421ef3e6de8f9fcf3|c72758f33784]] ("block: Export I/O topology for block devices and partitions") in May 2009. This enables Linux tools (parted, lvm, `mkfs.*`, etc) to [[https://access.redhat.com/articles/3911611|optimize placement of and access to data]]. Proper alignment is especially important for direct IO, since there is no page cache to absorb reads, writes or writeback at a lower granularity: all direct IO must be aligned to the logical block size, otherwise the IO will fail. The logical block size, then, is a Linux software construct which helps ensure backward compatibility with older userspace and also supports stacking IO devices together (RAID).

===== Checking physical and logical block sizes =====
Below is an example of how to check the physical and logical block sizes of an NVMe drive.
{{{
cat /sys/block/nvme0n1/queue/logical_block_size
512
cat /sys/block/nvme0n1/queue/physical_block_size
4096
}}}

===== 4kn alignment example =====
Drives which only support 4k block sizes are referred to as Advanced Format [[https://en.wikipedia.org/wiki/Advanced_Format|4kn]] drives: devices where both the physical and logical block sizes are 4k. For these devices it is important that applications perform direct IO whose size and offset are multiples of 4k, so that it is aligned to 4k.
[[https://access.redhat.com/articles/3911611|Applications that perform 512 byte aligned IO on 4kn drives will break]].

===== Alignment considerations beyond 4kn =====
In light of prior experience with increasing physical block sizes on storage devices, and since modern userspace ''should'' be reading the physical and logical block sizes prior to doing IO, ''in theory'' increasing storage device physical block sizes should not be an issue nor require many changes, if any.

===== Relevant block layer historic Linux commits =====
 * [[https://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git/commit/?id=e1defc4ff0cf57aca6c5e3ff99fa503f5943c1f1|e1defc4ff0cf]] ("block: Do away with the notion of hardsect_size"). The sysfs `hw_sector_size` is just the old name for the logical block size.
 * [[https://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git/commit/?id=ae03bf639a5027d27270123f5f6e3ee6a412781d|ae03bf639a50]] (block: Use accessor functions for queue limits)
 * [[https://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git/commit/?id=cd43e26f071524647e660706b784ebcbefbd2e44|cd43e26f0715]] (block: Expose stacked device queues in sysfs)
 * [[https://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git/commit/?id=025146e13b63483add912706c101fb0fb6f015cc|025146e13b63]] (block: Move queue limits to an embedded struct)
 * [[https://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git/commit/?id=c72758f33784e5e2a1a4bb9421ef3e6de8f9fcf3|c72758f33784]] (block: Export I/O topology for block devices and partitions)

It is worth quoting in full the commit which added the logical block size to userspace:
{{{
block: Export I/O topology for block devices and partitions

To support devices with physical block sizes bigger than 512 bytes we need to ensure proper alignment. This patch adds support for exposing I/O topology characteristics as devices are stacked.

logical_block_size is the smallest unit the device can address.
physical_block_size indicates the smallest I/O the device can write without incurring a read-modify-write penalty.

The io_min parameter is the smallest preferred I/O size reported by the device. In many cases this is the same as the physical block size. However, the io_min parameter can be scaled up when stacking (RAID5 chunk size > physical block size).

The io_opt characteristic indicates the optimal I/O size reported by the device. This is usually the stripe width for arrays.

The alignment_offset parameter indicates the number of bytes the start of the device/partition is offset from the device's natural alignment. Partition tools and MD/DM utilities can use this to pad their offsets so filesystems start on proper boundaries.

Signed-off-by: Martin K. Petersen <martin.petersen@oracle.com>
Signed-off-by: Jens Axboe <jens.axboe@oracle.com>
}}}

=== Experimenting with LBS ===
As of June 25, 2024, testing LBS still requires out-of-tree kernel patches. These are available on the kdevops linux-next based branch [[https://github.com/linux-kdevops/linux/tree/large-block-minorder-for-next|large-block-minorder-for-next]].

==== LBS on QEMU ====
QEMU has been used with the NVMe driver to experiment with LBS. You can experiment with LBS by simply using a physical and logical block size larger than your `PAGE_SIZE`. QEMU does not allow for different physical and logical block sizes.

==== LBS on brd ====
To create a 64k LBS RAM block device use:
{{{
modprobe brd rd_nr=1 rd_size=1024000 rd_blksize=65536 rd_logical_blksize=65536
}}}

==== LBS on kdevops ====
See the [[https://github.com/linux-kdevops/kdevops/blob/master/docs/lbs.md|kdevops LBS R&D]] page for how to use kdevops to quickly get started experimenting with any of the components mentioned above.

=== Testing with LBS ===
kdevops has been extended to support LBS filesystem profiles on XFS. Tests need to be run for XFS with LBS, and blktests need to be run as well.
==== fstests ====
kdevops has been extended to support testing XFS with block sizes > 4k and different sector sizes. fstests testing has been completed and confidence in the testing is high.

===== fstests XFS baseline =====
One result of this work has been a strong baseline to test against: [[https://github.com/linux-kdevops/kdevops/blob/master/docs/xfs-bugs.md|the known critical failures without LBS have been reported and are tracked on the kdevops XFS bugs page]]. Note that some of these issues are known and not easy to fix, while others are new and we have provided new fstests tests to help reproduce them faster. These issues are not specific to LBS; they are issues in the upstream kernel with existing 4k block sized filesystems.

===== fstests XFS LBS regressions =====
There are no known regressions with the LBS patches.

===== Known LBS issues =====
The following striped device mapper setup, with a large agcount of 512 on a 64k block size filesystem, is known to immediately create a corrupted filesystem. This requires further investigation.
{{{
dmsetup create test-raid0 --table '0 937680896 striped 2 4096 /dev/nvme0n1 0 /dev/nvme1n1 0'
mkfs.xfs -f -b size=64k -d agcount=512 /dev/mapper/test-raid0 -m reflink=0 -l logdev=/dev/sdb12,size=2020m,sectsize=512
}}}

== LBS resources ==
 * [[https://www.kernel.org/doc/gorman/html/understand/understand013.html#toc6|Mel Gorman's book on the page cache]]
 * [[https://lwn.net/Articles/232757/|2007 work by Christoph Lameter to support block sizes larger than PAGE_SIZE in the page cache with compound pages]]
 * [[https://lwn.net/Articles/239621/|2007 initial work of fsblock by Nick Piggin to replace buffer heads to support large block sizes announced]]
 * [[https://lwn.net/Articles/321390/|2009 version of fsblock work posted]]
 * [[https://lwn.net/Articles/322668/|2009 lwn coverage of fsblock]]
 * [[https://lore.kernel.org/linux-mm/20140124110928.GR4963@suse.de/|2014 LSFMM discussion over prior large block size efforts and worthiness of talking about it at the summit]]
 * This is a [[https://lore.kernel.org/linux-mm/20140123082121.GN13997@dastard/|summary, mostly by Chinner, of why Nick Piggin's and Christoph Lameter's strategies had failed]]
  * Lameter's strategy used high order compound pages in the page cache so that nothing needed to change on the filesystem level in order to support this
  * Chinner supported revisiting this strategy as we now use and support compound pages all over and also support compaction, and compaction helps with ensuring we can get higher order pages
  * There is still concern for fragmentation if you start doing a lot of only high order page allocations
  * Chinner still wondered: if Lameter's approach of modifying only the page cache is used, and a page fault means mapping each page with its own pte, why do we need contiguous pages? Can't we use discontiguous pages?
  * Nick Piggin's fsblock strategy rewrites buffer head logic to support filesystem blocks larger than the page size, while leaving the page cache untouched.
   * The downside to Nick's strategy is that all filesystems would need to be rewritten to use fsblock instead of buffer heads
 * [[https://lwn.net/ml/linux-fsdevel/20181107063127.3902-1-david@fromorbit.com/|Dave Chinner's 2018 effort to support block size > PAGE_SIZE]]
 * [[https://lkml.org/lkml/2021/9/17/55|2021 iomap description as a page cache abstraction]]: fs/iomap already provides filesystems with a complete, efficient page cache abstraction that only requires filesystems to provide block mapping services. Filesystems using iomap do not interact with the page cache at all. And David Howells is working with Willy and all the network fs devs to build an equivalent generic netfs page cache abstraction based on folios that is supported by the major netfs client implementations in the kernel.
 * [[https://lkml.org/lkml/2021/9/17/55|2021 description of fs/xfs/xfs_buf.c while discussing the folio design vs an opaque object strategy]]: fs/xfs/xfs_buf.c is an example of a high performance handle based, variable object size cache that abstracts away the details of the data store being allocated from slab, discontiguous pages, contiguous pages or vmapped memory. It is basically a two decade old re-implementation of the Irix low layer global disk-addressed buffer cache, modernised and tailored directly to the needs of XFS metadata caching.
 * [[Folio-enabling the page cache|2021 Folio-enabling the page cache]] - enables filesystems to be converted to folios
 * [[https://lwn.net/Articles/893512/|2022 LSFMM coverage on A memory folio update]]
  * buffer-head --> iomap, why?
Because it was designed with larger block size support in mind
  * only readahead uses folios today
  * all current filesystems' write paths use base pages, and it has not been clear when large folios should be used
  * some filesystems want features like range-locking but this gets complex as you need to take a page's lock before a filesystem lock
  * page reclaim can be problematic for filesystems
  * Wilcox suggested most filesystems already do a good job with writeback and so the kernel's reclaim mechanism may not be needed anymore
   * afs already removed the writepage() callback
   * XFS hasn't had a writepage() callback since last summer
   * hnaz suggests that writepage() call is still there on paper, but it's been neutered by conditionals that rarely trigger in practice. It's also only there for the global case, never called for cgroup reclaim. Cgroup-aware flushers are conceivable, but in practice the global flushers and per-cgroup dirty throttling have been working well.
  * folios could increase write amplification, as when a page is dirty the entire folio needs to be written
 * [[https://lore.kernel.org/all/20230424054926.26927-1-hch@lst.de/|Christoph Hellwig's April 2023 patches on enabling Linux without buffer-heads]]

== Divide & Conquer work - OKRs for LBS ==
Here is a set of [[https://en.wikipedia.org/wiki/OKR|OKRs]] to help with folio work & LBS. OKRs are used to help divide & conquer the work into concrete, tangible tasks we need to complete for proper LBS support upstream. Since a lot of this is editing tables, this is maintained on a Google sheet: [[https://docs.google.com/spreadsheets/d/e/2PACX-1vS7sQfw90S00l2rfOKm83Jlg0px8KxMQE4HHp_DKRGbAGcAV-xu6LITHBEc4xzVh9wLH6WM2lR0cZS8/pubhtml|OKRs for LBS]]
----
CategoryDocs |

Large block sizes (LBS)

This page documents the rationale for increasing block sizes over time across different storage technologies, which helps meet larger IO demands and reduce cost, and explains how the Linux kernel is adapting to support these changes.

Contents

- Large block sizes (LBS)
  - Increasing block sizes over time
  - Linux support for larger block sizes
  - Filesystem and storage LBS support
  - LBS resources
  - Divide & Conquer work - OKRs for LBS

Increasing block sizes over time

Block sizes have increased before, and they are increasing again with more modern storage technologies. This section provides a brief overview of these advancements.

Increase in block sizes on older storage technologies

As storage capacity demands increase, it becomes more important to reduce the amount of space required to store data, which also helps reduce cost. Older storage devices used a physical block size of 512 bytes. To get past the theoretical limits on areal density with 512 byte sectors, storage devices advanced to "long data sectors": 1k sectors became a reality, and 4k physical drives soon followed.

Increase in block sizes on flash storage

With flash storage, capacity increases in NAND come from multi-level cells, which reduce the number of MOSFETs required to store the same amount of data compared to single level cells. Hence we have SLC, MLC, TLC, QLC and PLC. For example, QLC stores 4 bits per cell, holding 33% more capacity than TLC cells, which store 3 bits per cell.
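The capacity arithmetic above can be checked with a few lines of Python. This is a simplified sketch that only counts data bits per cell; it ignores ECC, spare cells and over-provisioning, which real NAND parts also need.

```python
# Data bits stored per NAND cell for each cell type.
BITS_PER_CELL = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4, "PLC": 5}


def capacity_gain(new: str, old: str) -> float:
    """Relative capacity gain of `new` cells over `old` cells for the same cell count."""
    return BITS_PER_CELL[new] / BITS_PER_CELL[old] - 1


def cells_needed(bits: int, cell_type: str) -> int:
    """Number of cells needed to store `bits` data bits (rounded up)."""
    return -(-bits // BITS_PER_CELL[cell_type])


print(f"QLC vs TLC: {capacity_gain('QLC', 'TLC'):.0%}")  # QLC vs TLC: 33%
print(cells_needed(4096 * 8, "SLC"), cells_needed(4096 * 8, "QLC"))  # 32768 8192
```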

Indirection Unit size increases

In modern SSDs, an indirection map in the Flash Translation Layer (FTL) enables the device to map writes in its virtual address space to any underlying physical location. Hosts use LBAs to interact with drives, and the FTL uses its indirection map for logical to physical (L2P) mapping, mapping each Logical Page Number (LPN) to a Physical Page Number (PPN). The granularity of this mapping is referred to as the Indirection Unit (IU). The entire mapping table is kept in the storage controller's DRAM: the smaller the mapping table, the less DRAM is needed and therefore the lower the cost to end users. A 4k IU has sufficed for many years, but as storage drive capacities increase it becomes increasingly necessary to embrace larger IUs to reduce SSD cost. Examples are the Samsung BM1743 61.45 TiB and the Solidigm D5-P5336, which have a 16k IU.
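To see why a larger IU saves DRAM, the sketch below sizes a flat L2P table with one entry per IU. The 4 byte entry size and the 64 TiB capacity are illustrative assumptions, not figures for any specific drive; whatever the entry size, moving from a 4k to a 16k IU divides the number of entries by four.

```python
KiB = 1024
GiB = 1024 ** 3
TiB = 1024 ** 4


def l2p_table_bytes(capacity: int, iu: int, entry_bytes: int = 4) -> int:
    """Size of a flat L2P table with one entry per IU.

    entry_bytes=4 is an illustrative assumption; real FTLs may differ.
    """
    entries = -(-capacity // iu)  # ceiling division: one entry per (partial) IU
    return entries * entry_bytes


capacity = 64 * TiB  # hypothetical drive capacity
table_4k = l2p_table_bytes(capacity, 4 * KiB)
table_16k = l2p_table_bytes(capacity, 16 * KiB)
print(table_4k // GiB, "GiB of DRAM with a 4k IU")    # 64 GiB of DRAM with a 4k IU
print(table_16k // GiB, "GiB of DRAM with a 16k IU")  # 16 GiB of DRAM with a 16k IU
print(f"DRAM savings: {1 - table_16k / table_4k:.0%}")  # DRAM savings: 75%
```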

It is already well known that using a 16KiB IU provides benefits for large sequential IOs. However, it is also important to review what a single 4k write implies on larger IUs. A 4k write on a 16k IU implies a 16k read followed by a 16k write. The Write Amplification Factor (WAF) is defined as the amount of data the device actually writes divided by the amount of data the host asked it to write, so in the worst case a 4k IO workload on a 16k IU drive has a WAF of 16k/4k = 4. Research by the team at Micron, presented by Luca Bert at the 2023 Flash Memory Summit, suggests that real workloads end up aligning most writes to the IU, and in practice the WAF is much smaller. Luca's team summarizes that:

- Using a 16k IU provides 75% DRAM savings

- Using a 16k IU on a series of real workloads, although the expected WAF was around 4, the observed WAF was less than 2, and sometimes close to 1

- The data shows that there is a relationship between larger writes and lower WAF

With a 16k IU, if you counted the number of IOs of each size for a workload at random points in time and computed the WAF, you might expect the worst WAF where most IOs are 4k. Empirical observations reveal, however, that it is not the IO count that matters but the volume of data each IO contributes to the workload.
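The WAF reasoning above can be modeled in a few lines. This is a deliberately simplified sketch, assuming the device rewrites every IU a write touches in full and ignoring garbage collection and internal buffering; the workload mix is made up for illustration and is not Micron's data. It shows a lone 4k write hitting the worst case WAF of 4, while a workload where 90% of the IOs are 4k but most of the bytes come from large IU-aligned writes stays close to 1.

```python
from typing import Iterable, Tuple

KiB = 1024
MiB = 1024 * KiB


def device_bytes_written(offset: int, length: int, iu: int) -> int:
    """Bytes the device writes for one host write, assuming every IU the write
    touches is rewritten in full (read-modify-write for partial IUs)."""
    first_iu = offset // iu
    last_iu = (offset + length - 1) // iu
    return (last_iu - first_iu + 1) * iu


def workload_waf(writes: Iterable[Tuple[int, int]], iu: int) -> float:
    """WAF = bytes written by the device / bytes written by the host."""
    host = device = 0
    for offset, length in writes:
        host += length
        device += device_bytes_written(offset, length, iu)
    return device / host


IU = 16 * KiB
print(workload_waf([(0, 4 * KiB)], IU))         # 4.0
print(workload_waf([(4 * KiB, 16 * KiB)], IU))  # 2.0 (16k write straddling two IUs)

# Hypothetical mix: 90 IU-aligned 4k writes and 10 IU-aligned 1 MiB writes.
# 90% of the IOs are 4k, but the 1 MiB writes carry most of the bytes.
mixed = [(i * IU, 4 * KiB) for i in range(90)]
mixed += [(100 * MiB + j * MiB, MiB) for j in range(10)]
print(round(workload_waf(mixed, IU), 2))  # 1.1
```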

IU size increase implications on Linux

Although more work should be done to independently verify Micron's findings, the adoption of larger IUs for QLC seems inevitable given that products are already shipping with them. Storage vendors serious about large capacity QLC drives are either already embracing a 16k IU or looking towards larger IUs. How Linux can adapt to support and take advantage of 16k and larger IUs is part of the purpose of this page.

Alignment to the IU

As documented in Intel's large Indirection Unit white paper, one of the options to optimize performance and endurance is to align writes to the IU. This can be done by:

- Aligning partitions and their sizes to the IU

- Using direct IO with buffers aligned to the IU, for example with posix_memalign()

At the time the white paper was written, buffered IO with blocks larger than 4k was not possible, as Linux lacked support for pages in the page cache greater than 4k.
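A minimal sketch of the partition alignment check, assuming the standard sysfs layout where /sys/block/<disk>/<partition>/start and size are reported in 512 byte sectors. The disk and partition names and the 16k IU are placeholders; to keep the example runnable anywhere it builds a fake sysfs tree in a temporary directory, including a legacy partition starting at sector 63.

```python
import os
import tempfile

SECTOR = 512  # sysfs reports partition start and size in 512 byte sectors


def read_sysfs_int(path: str) -> int:
    with open(path) as f:
        return int(f.read())


def partition_iu_alignment(disk: str, part: str, iu: int, sysfs: str = "/sys/block") -> dict:
    """Check whether a partition's start offset and size are multiples of the IU."""
    base = os.path.join(sysfs, disk, part)
    start = read_sysfs_int(os.path.join(base, "start")) * SECTOR
    size = read_sysfs_int(os.path.join(base, "size")) * SECTOR
    return {"start_aligned": start % iu == 0, "size_aligned": size % iu == 0}


def align_up(value: int, alignment: int) -> int:
    """Round value up to the next multiple of alignment, e.g. a new partition start."""
    return -(-value // alignment) * alignment


IU = 16 * 1024  # assumed IU size
with tempfile.TemporaryDirectory() as root:  # fake sysfs tree for the demo
    for part, start, size in (("nvme0n1p1", 2048, 4096), ("nvme0n1p2", 63, 4096)):
        os.makedirs(os.path.join(root, "nvme0n1", part))
        for name, value in (("start", start), ("size", size)):
            with open(os.path.join(root, "nvme0n1", part, name), "w") as f:
                f.write(f"{value}\n")
    modern = partition_iu_alignment("nvme0n1", "nvme0n1p1", IU, sysfs=root)
    legacy = partition_iu_alignment("nvme0n1", "nvme0n1p2", IU, sysfs=root)

print(modern)  # {'start_aligned': True, 'size_aligned': True}
print(legacy)  # {'start_aligned': False, 'size_aligned': True}
print(align_up(63 * SECTOR, IU) // SECTOR)  # 64: first IU-aligned sector at or after 63
```

On a real system the same function would be called without the sysfs argument, for example partition_iu_alignment("nvme0n1", "nvme0n1p1", IU).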

IO introspection verification

OCP NVMe Cloud specification SMART-21 log page

The OCP 1.0 NVMe Cloud specification refers to the SMART Cloud Health Log, log identifier 0xC0, a vendor unique log page. Section 4.8.3 covers the log page guarantees. This log page is 512 bytes. Section 4.8.4 defines the attributes found on that log page. The SMART-21 entry, at byte address 143:136 (offset 0x88), is an 8 byte data field described as the number of writes which are unaligned to the IU:

This is a count of the number of write IOs performed by the device that are not aligned to the indirection unit size (IU) of the device. Alignment indicates only the start of each IO. The length does not affect this count. This counter shall reset on power cycle. This counter shall not wrap. This shall be set to zero on factory exit.

Reading this value should not affect performance, so it can be read before and after a workload; the difference is the number of IOs issued during the workload which were unaligned to the IU.

Example usage with nvme-cli:

# Get count before
nvme get-log /dev/nvme1n1 -i 0xc0 -L 0x88 -l 8
Device:nvme1n1 log-id:192 namespace-id:0xffffffff
0 1 2 3 4 5 6 7 8 9 a b c d e f
0000: 3c 21 00 00 00 00 00 00 "<!......"

# Write 8k as two sequential 4k direct IOs (at offsets 0 and 4096)
fio --name direct-io-8k --direct=1 --rw=write --bs=4096 --numjobs=1 --iodepth=1 --size=$((4096*2)) --ioengine=io_uring --filename /dev/nvme1n1

# Get count after
nvme get-log /dev/nvme1n1 -i 0xc0 -L 0x88 -l 8
Device:nvme1n1 log-id:192 namespace-id:0xffffffff
0 1 2 3 4 5 6 7 8 9 a b c d e f
0000: 3d 21 00 00 00 00 00 00 "=!......"

# Do the simple math for one count
calc
; 0x213d - 0x213c
1
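The log page bytes above are a little-endian counter, so 3c 21 decodes to 0x213c. The sketch below decodes the nvme-cli hexdump row and models the SMART-21 rule quoted above, where only the start of each IO matters. The +1 is consistent with fio issuing two sequential 4k writes, at offsets 0 and 4096, where only the second one is unaligned to an IU larger than 4k; the 16k IU used here is an assumption, since the drive's IU is not stated.

```python
def parse_log_row(row: str) -> int:
    """Decode an nvme-cli hexdump row such as
    '0000: 3c 21 00 00 00 00 00 00 "<!......"' as a little-endian integer."""
    hex_bytes = row.split(":", 1)[1].split('"', 1)[0]  # drop offset and ASCII column
    return int.from_bytes(bytes.fromhex(hex_bytes), "little")


def count_iu_unaligned(writes, iu: int) -> int:
    """Model of SMART-21: count writes whose start offset is not IU aligned.
    Per the spec text, the length of each write does not matter."""
    return sum(1 for offset, _length in writes if offset % iu != 0)


before = parse_log_row('0000: 3c 21 00 00 00 00 00 00 "<!......"')
after = parse_log_row('0000: 3d 21 00 00 00 00 00 00 "=!......"')
print(hex(before), hex(after), after - before)  # 0x213c 0x213d 1

# fio --bs=4096 --size=8192 --rw=write: two 4k writes at offsets 0 and 4096.
fio_writes = [(0, 4096), (4096, 4096)]
print(count_iu_unaligned(fio_writes, iu=16 * 1024))  # 1 (assumed 16k IU)
print(count_iu_unaligned(fio_writes, iu=4 * 1024))   # 0 (a 4k IU would not count it)
```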

eBPF blkalgn

For more flexibility, for example when working with devices which do not support the OCP 0xC0 log page, there are alternatives based on eBPF. These are flexible but can have an impact on performance since they rely on eBPF.

The blkalgn tool uses eBPF to help monitor the size and alignment of the blocks sent to a device. The tool is currently under development and is expected to be merged into the IOVisor/bcc project [1].

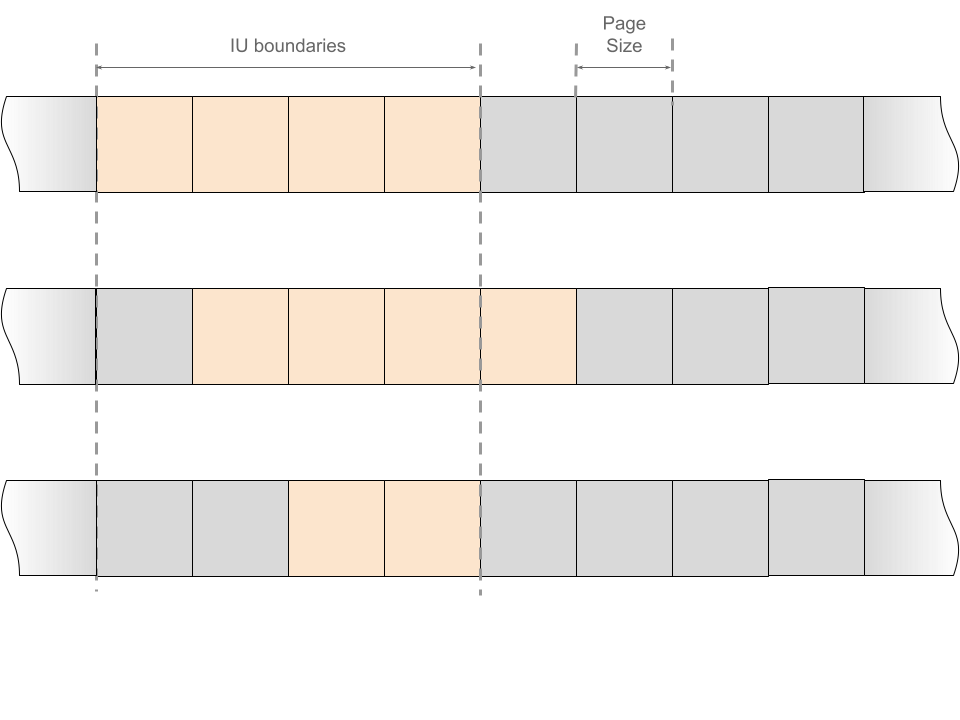

The tool monitors the LBA (Logical Block Address) and size of all I/Os sent from the host to the drive. The figure represents memory (in gray) in host base page size chunks, and how the I/Os (in yellow) may or may not be aligned to the IU boundaries. The first graph represents the case where the I/O size and alignment exactly match the IU boundaries. In the second graph, the size of the I/O matches the IU size but the I/O is not aligned to the IU boundaries. The last graph represents the case where the I/O matches neither in size nor in alignment.

Below is a quick demonstration of the tool using an fio demo workload:

- The following fio commands demonstrate various block sizes and their respective workloads:

fio -bs=4k -iodepth=1 -rw=write -ioengine=sync -size=4k \
    -name=sync -direct=1 -filename=/dev/nvme0n2 -loop 40
fio -bs=8k -iodepth=1 -rw=write -ioengine=sync -size=8k \
    -name=sync -direct=1 -filename=/dev/nvme0n2 -loop 60
fio -bs=16k -iodepth=1 -rw=write -ioengine=sync -size=16k \
    -name=sync -direct=1 -filename=/dev/nvme0n2 -loop 80
fio -bs=32k -iodepth=1 -rw=write -ioengine=sync -size=32k \
    -name=sync -direct=1 -filename=/dev/nvme0n2 -loop 100
fio -bs=64k -iodepth=1 -rw=write -ioengine=sync -size=64k \
    -name=sync -direct=1 -filename=/dev/nvme0n2 -loop 100
fio -bs=128k -iodepth=1 -rw=write -ioengine=sync -size=128k \
    -name=sync -direct=1 -filename=/dev/nvme0n2 -loop 80
fio -bs=256k -iodepth=1 -rw=write -ioengine=sync -size=256k \
    -name=sync -direct=1 -filename=/dev/nvme0n2 -loop 60
fio -bs=512k -iodepth=1 -rw=write -ioengine=sync -size=512k \
    -name=sync -direct=1 -filename=/dev/nvme0n2 -loop 40

- Running the blkalgn tool will output the block size and alignment distribution for the captured write operations:

tools/blkalgn.py --disk nvme0n2 --ops write

^C

Block size : count distribution
0 -> 1 : 0 | |
2 -> 3 : 0 | |
4 -> 7 : 0 | |
8 -> 15 : 0 | |
16 -> 31 : 0 | |
32 -> 63 : 0 | |
64 -> 127 : 0 | |
128 -> 255 : 0 | |
256 -> 511 : 0 | |
512 -> 1023 : 0 | |
1024 -> 2047 : 0 | |
2048 -> 4095 : 0 | |
4096 -> 8191 : 40 |**************** |
8192 -> 16383 : 60 |************************ |
16384 -> 32767 : 80 |******************************** |
32768 -> 65535 : 100 |****************************************|
65536 -> 131071 : 100 |****************************************|
131072 -> 262143 : 80 |******************************** |
262144 -> 524287 : 60 |************************ |
524288 -> 1048575 : 40 |**************** |

Algn size : count distribution
0 -> 1 : 0 | |
2 -> 3 : 0 | |
4 -> 7 : 0 | |
8 -> 15 : 0 | |
16 -> 31 : 0 | |
32 -> 63 : 0 | |
64 -> 127 : 0 | |
128 -> 255 : 0 | |
256 -> 511 : 0 | |
512 -> 1023 : 0 | |
1024 -> 2047 : 0 | |
2048 -> 4095 : 0 | |
4096 -> 8191 : 40 |**************** |
8192 -> 16383 : 60 |************************ |
16384 -> 32767 : 80 |******************************** |
32768 -> 65535 : 100 |****************************************|
65536 -> 131071 : 100 |****************************************|
131072 -> 262143 : 80 |******************************** |
262144 -> 524287 : 60 |************************ |
524288 -> 1048575 : 40 |**************** |

- The tool can also trace all block commands issued to the block device using the --trace argument:

tools/blkalgn.py --disk nvme0n2 --ops write --trace
Tracing NVMe commands... Hit Ctrl-C to end.
DISK     OPS    LEN     LBA       PID   COMM      ALGN
...
nvme0n1  write  16384   5592372   1402  postgres  16384
nvme0n1  write  4096    8131612   1406  postgres  4096
nvme0n1  write  4096    8476298   1407  postgres  4096
nvme0n1  write  4096    5562083   1404  postgres  4096
nvme0n1  write  4096    5562081   1404  postgres  4096
nvme0n1  write  8192    5740024   977   postgres  8192
nvme0n1  write  8192    8476258   1407  postgres  8192
nvme0n1  write  16384   2809028   1400  postgres  16384
nvme0n1  write  8192    9393492   1402  postgres  8192
nvme0n1  write  16384   1777248   1401  postgres  16384
nvme0n1  write  8192    11376510  1404  postgres  8192
nvme0n1  write  4096    11376508  1404  postgres  4096
nvme0n1  write  8192    1022036   1405  postgres  8192
nvme0n1  write  4096    5755970   977   postgres  4096
nvme0n1  write  16384   1777252   1401  postgres  16384
nvme0n1  write  4096    9393589   1402  postgres  4096
nvme0n1  write  4096    2809039   1400  postgres  4096
nvme0n2  write  8192    9637351   1403  postgres  4096
nvme0n1  write  4096    5822235   1406  postgres  4096
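The histograms and the ALGN column can be reproduced with a small model. log2_bucket mirrors the power-of-two buckets printed above. io_alignment is our reading of the ALGN column, not blkalgn's actual code: the largest power of two, capped at the IO length, that divides the IO's byte offset. It assumes a 4096 byte LBA size, which is what makes it agree with the sample trace rows.

```python
from collections import Counter


def log2_bucket(value: int) -> tuple:
    """Power-of-two bucket (low, high) holding value, as in the histograms above."""
    k = value.bit_length() - 1
    return (1 << k, (1 << (k + 1)) - 1)


def io_alignment(length: int, lba: int, lba_size: int = 4096) -> int:
    """Our reading of the ALGN column (an assumption, not blkalgn's code): the
    largest power of two that divides the byte offset, capped at the IO length."""
    cap = 1 << (length.bit_length() - 1)  # largest power of two <= length
    offset = lba * lba_size
    if offset == 0:
        return cap
    return min(offset & -offset, cap)  # offset & -offset isolates the lowest set bit


# The fio demo: one write of each block size per loop.
fio_runs = {4096: 40, 8192: 60, 16384: 80, 32768: 100,
            65536: 100, 131072: 80, 262144: 60, 524288: 40}
hist = Counter()
for bs, loops in fio_runs.items():
    hist[log2_bucket(bs)] += loops
for (low, high), count in sorted(hist.items()):
    print(f"{low:>8} -> {high:<8}: {count}")

# A few (LEN, LBA) rows from the trace above.
print(io_alignment(16384, 5592372))  # 16384
print(io_alignment(4096, 8131612))   # 4096
print(io_alignment(8192, 9637351))   # 4096
```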

More details can be found in the tool's development repository [2] and in its pull request to the IOVisor/bcc project [3].

A version of the tool with capturing capabilities is also being explored and is currently hosted in the same development tree, branch: blkalgn-dump.

Supporting buffered IO for larger IUs

Using direct IO writes means your software is in charge of proper alignment. This can instead be done transparently for you by supporting larger block sizes in the page cache, aligned to the IU. Although Linux used to lack support for page cache entries larger than 4k on x86_64, the conversion of the page cache from a custom radix tree to the xarray now allows the page cache to use entries larger than 4k through folios. This also paves the way to support block sizes greater than the page size (bs > ps), and so block sizes greater than 4k.

By leveraging the xarray to support bs > ps, we can use block sizes aligned to larger IUs and let the page cache handle read-modify-writes in memory for IOs smaller than the IU. All writes issued to the device can therefore be aligned to the IU.
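A toy model of the buffered write path described above, assuming the filesystem block size equals a 16k IU: small writes only dirty whole blocks in memory (the page cache absorbs the read-modify-write), and writeback issues one block-sized, block-aligned IO per dirty block. The function name and the workload are hypothetical; real writeback would typically merge adjacent dirty blocks into larger IOs, which remain IU aligned.

```python
def writeback_ios(writes, block_size: int):
    """Toy model of buffered IO with an IU-sized block: writes dirty whole blocks in
    memory, and writeback issues one block-sized, block-aligned IO per dirty block."""
    dirty = set()
    for offset, length in writes:
        first = offset // block_size
        last = (offset + length - 1) // block_size
        dirty.update(range(first, last + 1))
    return [(block * block_size, block_size) for block in sorted(dirty)]


IU = 16 * 1024  # assumed block size == IU
app_writes = [(0, 4096), (4096, 4096), (20480, 512), (65536, 8192)]  # (offset, length)
ios = writeback_ios(app_writes, IU)
print(ios)  # [(0, 16384), (16384, 16384), (65536, 16384)]
print(all(offset % IU == 0 and length == IU for offset, length in ios))  # True
```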

Linux support for larger block sizes

For years, Linux has supported larger block sizes on both filesystems and block devices when the PAGE_SIZE is also large. Supporting a block size > PAGE_SIZE, or bs > ps for short, has required much more work and is the main focus of this page.

When considering large block sizes for filesystems and storage devices, this page focuses on the bs > ps case. It tries to itemize and keep track of progress, outstanding issues, and the ongoing efforts to support bs > ps (LBS) on Linux.

Addressing this is a large endeavor requiring coordination with different subsystems: filesystems, memory & IO.

Filesystem and storage LBS support

Linux filesystems have historically limited their maximum data block size to the PAGE_SIZE of the CPU and operating system. The page size on x86 has been 4k since the i386 days, but Linux has also supported architectures with page sizes greater than 4k for years. Examples are PowerPC with CONFIG_PPC_64K_PAGES and ARM64 with CONFIG_ARM64_64K_PAGES. In fact, some architectures such as ARM64 support different page sizes.

The XFS filesystem, for example, has supported data block sizes of up to 64k for years on ppc64 systems with CONFIG_PPC_64K_PAGES. Some RAID systems based on this are known to have existed and been sold. To create a 64k block size filesystem you can use:

mkfs.xfs -f -b size=65536

The above works today even on x86 with a 4k PAGE_SIZE; however, mounting the filesystem only works on a system with a PAGE_SIZE of at least 64k.
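The relationship between block size, PAGE_SIZE and folio order can be sketched as follows. Without bs > ps support a block must fit in one page, which is why the 64k filesystem above only mounts with a 64k PAGE_SIZE. With LBS, the page cache instead needs folios of at least one block, which is a minimum folio order of log2(bs / ps); the function names are hypothetical and the power-of-two requirement is an assumption of this sketch.

```python
import os


def min_folio_order(block_size: int, page_size: int) -> int:
    """Smallest folio order whose size, 2**order * page_size, holds one block.
    Order 0 is a single page; order 2 is four pages (16k with 4k pages)."""
    if block_size <= page_size:
        return 0
    pages = block_size // page_size
    if block_size % page_size or pages & (pages - 1):
        raise ValueError("block size must be a power-of-two multiple of the page size")
    return pages.bit_length() - 1


def mountable_without_lbs(block_size: int, page_size: int) -> bool:
    """Without bs > ps support, a filesystem block has to fit within one page."""
    return block_size <= page_size


page_size = os.sysconf("SC_PAGE_SIZE")  # commonly 4096 on x86_64
for bs in (4096, 16384, 65536):
    print(bs, mountable_without_lbs(bs, page_size), min_folio_order(bs, page_size))
```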

Linux XFS was ported from IRIX's version of XFS, and IRIX supported block sizes larger than the CPU's PAGE_SIZE, which we refer to for short as bs > ps. Linux has lacked bs > ps support, so adding it should bring Linux XFS up to parity with the features that IRIX XFS used to support.

Supporting bs > ps requires proper operating system support and today we strive to make this easier on Linux through the adoption of folios and iomap. The rest of this document focuses on the bs > ps world when considering LBS for filesystems.

Using a large block size on a filesystem does not necessarily require a storage device with a large or matching physical block size. The storage device's actual unit of data placement is referred to as its sector size. Although XFS supports 64k block sizes in the filesystem, the XFS sector size is limited to 32k today.

Most storage devices today work with a max sector size of 4k. Work to support storage devices with larger sector sizes is also part of this endeavor.

Linux support for LBS

Support for LBS on filesystems and block devices requires different types of efforts.

Most of the work required for filesystem bs > ps support is in the page cache, to support buffered IO.

The page cache assists filesystem buffered IO for LBS by absorbing changes in memory: applications can modify data at a smaller granularity than the block device sector size, and the page cache takes care of writing the data back in whole blocks.

When bypassing the page cache with direct IO, userspace must take care of alignment for LBS devices, which may require alignments larger than the 4k most applications are used to today, for example by allocating buffers with posix_memalign().
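A minimal userspace sketch of these direct IO alignment checks follows. It assumes the caller already knows the required alignment (for example 16k, taken from the device's logical block size in /sys/block/<disk>/queue/logical_block_size) instead of hard-coding 4096. The helper names alloc_dio_buffer() and dio_request_aligned() are hypothetical.

```c
#define _POSIX_C_SOURCE 200112L
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/*
 * Hypothetical helper: allocate a buffer usable for O_DIRECT IO on an
 * LBS device. The alignment is an assumption supplied by the caller and
 * should come from the device rather than a static 4k.
 */
static void *alloc_dio_buffer(size_t alignment, size_t len)
{
	void *buf = NULL;

	/* posix_memalign() needs a power of two multiple of sizeof(void *). */
	if (alignment < sizeof(void *) || (alignment & (alignment - 1)) != 0)
		return NULL;
	/* Direct IO lengths must also be a multiple of the alignment. */
	if (len == 0 || len % alignment != 0)
		return NULL;
	if (posix_memalign(&buf, alignment, len) != 0)
		return NULL;
	return buf;
}

/* Hypothetical helper: check that a file offset and IO length are aligned. */
static bool dio_request_aligned(uint64_t offset, size_t len, size_t alignment)
{
	return offset % alignment == 0 && len % alignment == 0;
}
```

The returned buffer would then be passed to pread()/pwrite() on a file descriptor opened with O_DIRECT, with offsets checked by dio_request_aligned() first.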

Partitions should be aligned to the large block size in use.

Changes may be required to userspace tools to ensure they always query the minimum IO and optimal IO sizes, if they do not already. Most applications should already be doing this because of the old 512 byte to 4k transition; however, applications which use direct IO with static 4k assumptions need to be fixed.
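The sketch below shows how a userspace tool might avoid static 4k assumptions. It reads the device's queue limits from sysfs files such as /sys/block/<disk>/queue/minimum_io_size and optimal_io_size, and checks partition alignment using the partition start from /sys/class/block/<part>/start, which sysfs reports in 512-byte sectors. The function names are hypothetical, and the policy in pick_io_size() is only an illustrative choice.

```c
#include <stdbool.h>
#include <stdio.h>

/*
 * Hypothetical helper: read one unsigned value from a sysfs queue
 * attribute. Returns 0 on success, -1 on failure.
 */
static int read_queue_limit(const char *path, unsigned long *value)
{
	FILE *f = fopen(path, "r");
	int ok;

	if (!f)
		return -1;
	ok = fscanf(f, "%lu", value) == 1;
	fclose(f);
	return ok ? 0 : -1;
}

/*
 * Illustrative policy: prefer optimal_io_size when the device reports one
 * (0 means "not reported") and it is a multiple of minimum_io_size,
 * otherwise use minimum_io_size.
 */
static unsigned long pick_io_size(unsigned long min_io, unsigned long opt_io)
{
	if (opt_io != 0 && min_io != 0 && opt_io % min_io == 0)
		return opt_io;
	return min_io;
}

/* Partition starts in sysfs are in 512-byte sectors. */
static bool partition_aligned(unsigned long long start_sectors,
			      unsigned long block_size)
{
	return (start_sectors * 512ULL) % block_size == 0;
}
```

For example, a partition starting at sector 2048 (1 MiB) is aligned to a 16k block size, while the legacy sector 63 start is not even 4k aligned.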

Testing to ensure all this works properly is ongoing.

Supporting LBS for filesystems should come first. This is done by supporting block sizes greater than 4k while keeping a 4k sector size, so that no new storage device is required.

A second step is to support devices with a larger sector size that do not require a larger LBA format. This is possible, for example, when the physical_block_size is larger than 4k but the LBA format is still 4k.

The last step is to support devices which require an LBA format larger than 4k.

Filesystems which already support folios and use iomap will require less work, so the initial focus of this endeavor is XFS.

How xarray enables larger folios and bs > ps

The Linux kernel v4.20 release was the first to include the xarray, a new data structure used by the page cache which is key to enabling bs > ps. The Linux kernel XArray documentation gives a good general understanding of the xarray. However, it does not detail much of the advanced API, does not explain why the xarray enables bs > ps, and does not describe the requirements for bs > ps.

xarray resources:

The xarray provides two APIs for users: the simple API and the advanced API. The page cache uses the advanced API. Both support an optional xarray feature called multi-index support, enabled with CONFIG_XARRAY_MULTI. Its Kconfig help text describes it well: "Support entries which occupy multiple consecutive indices in the XArray." This is the key part which enables bs > ps on Linux today.
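To illustrate what a multi-index entry means, here is a toy userspace model. It is not the kernel xarray API, and all names in it are hypothetical. One entry of order N occupies 2^N consecutive indices starting at an index aligned to 2^N, so a lookup at any index in that range returns the same entry, much as one high order folio covers several page cache indices.

```c
#include <stdbool.h>
#include <stddef.h>

/* Toy model only: a small fixed index space, not the kernel xarray. */
#define TOY_MAX_ORDER 6
#define TOY_SLOTS ((size_t)1 << TOY_MAX_ORDER)

struct toy_multi_array {
	const void *slot[TOY_SLOTS];
};

/* Store @entry over 2^order indices starting at @index. */
static bool toy_store_order(struct toy_multi_array *xa, size_t index,
			    unsigned int order, const void *entry)
{
	size_t nr, i;

	if (order > TOY_MAX_ORDER)
		return false;
	nr = (size_t)1 << order;
	if (index & (nr - 1))
		return false;	/* entries must be naturally aligned to their order */
	if (index + nr > TOY_SLOTS)
		return false;
	for (i = index; i < index + nr; i++)
		if (xa->slot[i])
			return false;	/* range already occupied */
	for (i = index; i < index + nr; i++)
		xa->slot[i] = entry;
	return true;
}

/* Any index inside a multi-index range returns the same entry. */
static const void *toy_load(const struct toy_multi_array *xa, size_t index)
{
	return index < TOY_SLOTS ? xa->slot[index] : NULL;
}
```

Storing an order 2 entry at index 4 makes indices 4 through 7 return it, while a store at index 9 with order 2 is rejected as unaligned.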

Page cache considerations

Not everything is obvious when working with the Linux kernel page cache. The items below highlight some of the less obvious requirements when using multi-index xarray support for the page cache:

- Allocating folios and inserting them into the page cache is not the only way folios become useful for IO.

- Truncation always tries to reclaim any unused pages in high order folios

- Readahead sizes may vary, and they are used to advance over mapped userspace pages; today readahead has no notion of minimum order requirements

- swap does not support high order folios today

- compaction does not support high order folios today

- index alignment to page order (covered below)

Page cache alignment to min order

When adding entries into the page cache we work in multiples of the minimum number of pages supported, that is, the min order. The page cache does not do this for us, so we must ensure that all higher order folios added for bs > ps are aligned to the min order. For example, a min order of 2 gives a 16 KiB block size with 4 KiB pages, so we use indices 0, 4, 8 and so on. This is what allows us to mimic a 16k PAGE_SIZE in software even though the real PAGE_SIZE is 4k.
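A minimal sketch of this index arithmetic follows. It assumes a min order is given, and the helper names are hypothetical rather than page cache functions. Rounding an index down to a multiple of 2^min_order gives the first index of its block. Stepping from there by 2^min_order pages produces the sequence 0, 4, 8, ... for a min order of 2.

```c
/* Number of pages in one min order unit (hypothetical helper). */
static unsigned long min_order_nr_pages(unsigned int min_order)
{
	return 1UL << min_order;
}

/* Round @index down to the start of its min order unit. */
static unsigned long align_index_down(unsigned long index, unsigned int min_order)
{
	return index & ~(min_order_nr_pages(min_order) - 1);
}

/* First index of the min order unit following the one containing @index. */
static unsigned long next_folio_index(unsigned long index, unsigned int min_order)
{
	return align_index_down(index, min_order) + min_order_nr_pages(min_order);
}
```

With a min order of 2, index 5 maps back to index 4 and the next folio starts at index 8. With a min order of 0 every index is already aligned.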

Today there are two use cases which try to use large order folios in the page cache, neither of which considers bs > ps:

- readahead

- iomap buffered writes

Both of these add high order folios to the page cache, and we expect shmem to soon follow the same approach. High order folios are used this way regardless of whether or not we use bs > ps. Both readahead and iomap use a loop which tries the largest possible folio order for the IO, without considering bs > ps. Implicitly, that loop only uses high order folios aligned to the index. The rationale today is to avoid overly aggressive changes, that is, not to consume more memory than before. Although aligning high order folios to the index is not a strict requirement today, it becomes one with bs > ps, as it also ensures alignment to the minimum page order. A higher order folio is simply a lower order folio doubled one or more times, so repeatedly multiplying 16k by 2 keeps the result aligned to 16k: alignment to a higher order implies alignment to any lower order. However, where a new high order folio can start does change when working with a min order.

Whether or not we want to support unaligned orders at different mapped indexes in the address space is debatable to a certain degree. With a min order of 0 we could in principle place folios anywhere in the address space, but even then we have currently opted in to a few requirements worth documenting clearly:

a) we skip order 1 because a large folio keeps its deferred_list in its third page, so every large order folio must have at least three pages, which in practice means at least order 2. Future LBS patches will remove this restriction.

b) when the min order is 0, we do not allocate a high order folio at an index which is not aligned to that folio's order; this saves memory and keeps the impact of the change minimal.

Requirement b) *may* change in the near future, to make the use of higher order folios more aggressive with a min order of 0.

A min order implies that we count in units of the min order number of pages, so by definition the index used for an allocation must always be aligned to the min order.

A folio of higher order than the min order is by definition a multiple of the min order size, so an index aligned to an order higher than the min order is also aligned to the min order.

Note that with these considerations, the max order which can be used varies from index to index in a way which is not obvious; for details refer to a userspace tool which visualizes this better.
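The rules above can be combined into a small sketch that computes the largest usable order at a given index. It assumes max_order >= min_order. It mirrors the index-aligned loop described for readahead and iomap, but it is not the kernel code, and max_usable_order() is a hypothetical name. Allocations must start on a min order boundary. The order steps down until the index is aligned to it. Order 1 is skipped when the min order is 0, per requirement a), and that case also covers requirement b).

```c
/*
 * Hypothetical sketch: largest folio order usable at @index.
 * Returns -1 if @index is not aligned to @min_order.
 */
static int max_usable_order(unsigned long index, unsigned int min_order,
			    unsigned int max_order)
{
	unsigned int order = max_order;

	if (index & ((1UL << min_order) - 1))
		return -1;	/* must start on a min order boundary */
	/* Step down until the folio would start at an index aligned to it. */
	while (order > min_order && (index & ((1UL << order) - 1)) != 0)
		order--;
	/* Requirement a): no order 1 folios, fall back to order 0. */
	if (order == 1 && min_order == 0)
		order = 0;
	return (int)order;
}
```

With a min order of 2 and a max order of 4, indexes 0, 4, 8 and 16 allow orders 4, 2, 3 and 4, so the usable order changes from index to index. With a min order of 0, index 6 only gets an order 0 folio, because the aligned order there would be 1.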

Patches for a first iteration of this page cache work are done and will be posted soon.

How folios help LBS

To address the complexity of writeback when the storage supports bs > PAGE_SIZE, folios should be used as a long-term solution for the page cache, to opportunistically cache files in larger chunks. However, there are some problems that need to be considered and measured to prove or disprove their value for storage. Folios can address the writeback problem by ensuring that the storage block size is treated as a single unit in the page cache. A problem with this is the assumption that the kernel can keep providing allocations of the target block IO size over time despite possible memory fragmentation.

A hypothesis to consider here is that if a filesystem regularly creates allocations in the block size required by the storage device, the kernel will also be able to reclaim memory in these block sizes. In the worst case, some workloads may spiral down until no allocations of the target IO block size are available. Testing this hypothesis is something everyone in the community can help with. The more memory is cached using folios, the easier it becomes to address memory problems and contention with bs > ps.

Folio order count recap

Folio sizes are most easily tracked by folio order, the power of 2 applied to the PAGE_SIZE: a folio of order n spans 2^n pages. So order 0 is 2^0, one PAGE_SIZE folio. Order 2 is 2^2, 4 * PAGE_SIZE, so 16k with 4k pages.
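The order arithmetic can be written as two small helpers, sketched here under the assumption that both sizes are powers of two. The names are hypothetical. The first gives the bytes spanned by a folio of a given order. The second gives the smallest order whose folio covers a block size, which is the min order needed for that block size.

```c
/* Bytes spanned by a folio of @order: 2^order pages of @page_size bytes. */
static unsigned long folio_order_bytes(unsigned int order, unsigned long page_size)
{
	return page_size << order;
}

/*
 * Smallest order whose folio covers @block_size bytes; 0 when the block
 * size is not larger than the page size (the bs <= ps case).
 */
static unsigned int order_for_block_size(unsigned long block_size,
					 unsigned long page_size)
{
	unsigned int order = 0;

	while ((page_size << order) < block_size)
		order++;
	return order;
}
```

With 4k pages a 16k block size needs order 2 and a 64k block size needs order 4. With 64k pages, a 64k block size needs only order 0.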

Block device cache LBS support

Block-based storage devices also have an implied block device page cache, the bdev cache. The Linux bdev cache is used to query the storage device's capacity and to provide the block layer with access to information about a drive's partitions. By default, a device with a capacity, or size, greater than 0 gets scanned for partitions on initialization through device_add_disk(). Today the bdev cache uses the old buffer-heads by default to talk to the block device for block data, since the block device page cache is implemented as a simple pseudo-filesystem in which each block device is represented by a single inode covering the entire disk.

CONFIG_BUFFER_HEAD implications for block device cache

In Linux you can now avoid using buffer-heads in the block device cache if you only enable filesystems which use iomap. Today that effectively means enabling only xfs and btrfs and disabling most other filesystems, such as ext4, which lets you disable CONFIG_BUFFER_HEAD.

Using iomap for the block device cache, however, is only needed if your device's minimum sector size is greater than 4k. You can still benefit from LBS without disabling CONFIG_BUFFER_HEAD if your drive supports 4k IOs. Strictly, the requirement is that the drive supports IOs as small as the system PAGE_SIZE.

block device cache buffer-head and iomap co-existence

Today the block device cache uses buffer-heads if CONFIG_BUFFER_HEAD=y, and uses iomap only if CONFIG_BUFFER_HEAD is disabled. There is no alternative.

Work is underway to support co-existence, so that the block device cache can support LBS devices which only support IOs larger than the system PAGE_SIZE, while optionally also supporting block devices with filesystems which require buffer-heads.

Patches for co-existence of iomap and buffer-heads in the block device cache exist and will be posted soon.

Block driver support

Not all block drivers are equipped to support LBS drives yet. Each one needs to be modified to ensure it works with LBS.

Filesystem LBS support

To support a larger block size, a filesystem needs to provide the correct offset-in-file to block-on-disk mapping. Supporting LBS with reflinks / CoW can be more complicated. For example, on a write under memory pressure, the kernel would need all the pages of the corresponding 64 KiB block in memory. Low memory conditions could easily create synchronization problems.

Filesystems provide the offset-in-file to block-on-disk mapping through either the old buffer-heads or the newer iomap. Folios make it possible to handle synchronization and deal with an LBS block atomically.

LBS with buffer-heads

Linux buffer-heads, implemented in fs/buffer.c, provide filesystems with a 512 byte buffer array based block mapping abstraction for dealing with block devices and the page cache. As decided at LSFMM 2023 in Vancouver, Canada, filesystems which use buffer-heads will not be able to leverage LBS support. Today most filesystems except xfs rely on buffer-heads. Filesystems which want to benefit from LBS need to be converted over to iomap; btrfs is an example of a filesystem working on converting over to iomap completely.

LBS with iomap

The newer iomap also lets filesystems provide callbacks, but it was designed instead to let filesystems simplify their block-mapping callbacks for each type of need within the filesystem. This makes the callbacks easier to read and dedicated to each specific need.

Filesystems which rely on iomap can take advantage of LBS support.

LBS with storage devices

Storage devices announce their physical block size to Linux by calling blk_queue_physical_block_size(). To help with stacking IO devices and to ensure backward compatibility with older userspace, a device can also announce a smaller logical block size with blk_queue_logical_block_size().
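From userspace, the values a driver announced can be read back with the BLKSSZGET (logical block size) and BLKPBSZGET (physical block size) ioctls from <linux/fs.h>. Below is a minimal sketch; query_block_sizes() is a hypothetical name. It returns -1 for anything that is not an accessible block device.

```c
#include <fcntl.h>
#include <linux/fs.h>
#include <sys/ioctl.h>
#include <unistd.h>

/*
 * Hypothetical helper: read the logical and physical block sizes of a
 * block device such as /dev/nvme0n1. Returns 0 on success, -1 on failure.
 */
static int query_block_sizes(const char *dev, int *logical, unsigned int *physical)
{
	int fd = open(dev, O_RDONLY);
	int ret = 0;

	if (fd < 0)
		return -1;
	if (ioctl(fd, BLKSSZGET, logical) < 0 ||
	    ioctl(fd, BLKPBSZGET, physical) < 0)
		ret = -1;
	close(fd);
	return ret;
}
```

On a device with a 4k LBA format and a 16k physical block size, this would be expected to report a logical size of 4096 and a physical size of 16384.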

LBS with NVMe

Large block size support for NVMe can come through different means.

LBS NVMe support

There are different ways in which NVMe drives can support LBS:

- Using optimal performance and atomics:

  - npwg >= 16k

  - awupf or nawupf >= 16k

- Larger than 4k LBA format

The npwg field is the Namespace Preferred Write Granularity. If a namespace defines it, it indicates the smallest recommended write granularity for this namespace, in logical blocks, as a 0's based value. It must be <= the Maximum Data Transfer Size (MDTS).

The awupf field is the Atomic Write Unit Power Fail. It indicates the size of the write operation guaranteed to be written atomically to the NVM across all namespaces with any supported namespace format during a power fail or error condition. If a specific namespace guarantees a larger size than is reported in this field, that namespace specific size is reported in the nawupf field of the Identify Namespace data structure.

The nawupf field is the Namespace Atomic Write Unit Power Fail. It indicates the namespace specific size of the write operation guaranteed to be written atomically to the NVM during a power fail or error condition.

LBS with NVMe npwg and awupf

OCP 2.0 requires NVMe drives to expose their Indirection Unit (IU) through npwg. NVMe drives which support both an npwg of 16k and an awupf of 16k will be able to leverage a 16k physical block size while still supporting a 4k LBA format. A 4k LBA format means the drive remains backward compatible with 4k IOs.

NVMe sets the logical and physical block sizes in nvme_update_disk_info(). The logical block size is set to the NVMe LBA format block size. The physical block size is set to the minimum of the preferred write granularity (derived from npwg, and defaulting to the logical block size) and the announced atomic write size (derived from nawupf or awupf).
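The following userspace model shows how these fields combine into a physical block size. It assumes the driver takes the smaller of the npwg-derived write granularity and the nawupf/awupf-derived atomic write size. Per the NVMe specification, npwg, nawupf and awupf are 0's based counts of logical blocks. The function and its boolean parameters are hypothetical simplifications: they are not the driver's code and ignore other conditions the driver may check.

```c
#include <stdint.h>

/* Hypothetical model of the physical block size computation for NVMe. */
static uint32_t nvme_model_physical_bs(uint32_t lba_bytes,
				       int io_opt_supported, uint16_t npwg,
				       int nawupf_valid, uint16_t nawupf,
				       uint16_t awupf)
{
	uint32_t phys_bs = lba_bytes;	/* default: the logical block size */
	uint32_t atomic_bs;

	/* npwg is 0's based: a value of 3 means 4 logical blocks. */
	if (io_opt_supported)
		phys_bs = lba_bytes * (1u + npwg);
	/* Prefer the namespace specific atomic size when it is reported. */
	if (nawupf_valid && nawupf)
		atomic_bs = lba_bytes * (1u + nawupf);
	else
		atomic_bs = lba_bytes * (1u + awupf);
	/* Writing one physical block is assumed to be atomic, so cap it. */
	return phys_bs < atomic_bs ? phys_bs : atomic_bs;
}
```

With a 4k LBA format, npwg = 3 and nawupf = 3 (both meaning 16k), the model yields a 16k physical block size. If the drive only guarantees 4k atomic writes, the physical block size stays at 4k even though npwg advertises 16k.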